Judge Henry Wingate of the U.S. District Court for the Southern District of Mississippi recently faced scrutiny after issuing a temporary restraining order (TRO) that contained multiple errors. The TRO, which aimed to block enforcement of a Mississippi state law prohibiting certain DEI programs in public educational institutions, mistakenly listed non-parties as plaintiffs, misquoted the complaint and the legislation, and cited a non-existent case. These errors prompted the Mississippi Attorney General's Office to file a motion for clarification, and Judge Wingate then replaced the order. Although it has not been confirmed that AI was responsible for these mistakes, the situation resembles a previous incident involving Judge Julien Xavier Neals, in which AI-generated research led to errors in a securities case ruling.

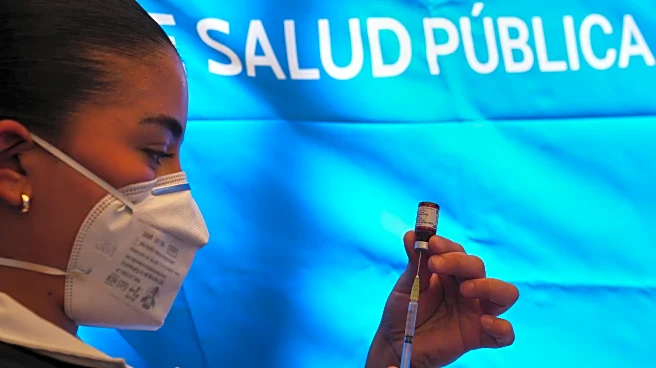

The errors in Judge Wingate's order highlight the potential pitfalls of using AI in judicial processes. As AI technology becomes more integrated into legal research and decision-making, the accuracy and reliability of AI-generated content become critical. Mistakes like these can undermine public trust in the judiciary and complicate legal proceedings. The incident underscores the need for careful oversight and validation of AI tools in legal settings to prevent similar occurrences. It also raises questions about how to balance technological advancement with the integrity of the courts.

The legal community may see increased scrutiny and calls for guidelines on the use of AI in judicial settings. Judges and legal professionals might need to undergo training to better understand AI tools and their limitations. Additionally, there could be discussions on implementing checks and balances to ensure AI-generated content is thoroughly reviewed before being used in official rulings. Stakeholders, including legal tech companies, may collaborate to improve AI systems to prevent errors and enhance their reliability in legal contexts.

The incident with Judge Wingate may prompt broader discussions on the ethical implications of AI in the judiciary. Concerns about accountability, transparency, and the potential for bias in AI systems could lead to debates on the role of technology in legal decision-making. In the long term, this could influence how AI is integrated into other areas of public policy and governance, emphasizing the need for ethical frameworks and standards.
