Inference Speed Imperative

OpenAI is reportedly dissatisfied with some of Nvidia's chips, particularly their speed at AI inference, the process by which AI models generate responses to user queries. This has led the company to explore alternative hardware since last year, a move that could strain the relationship between OpenAI and Nvidia, two of the leading players in the current artificial intelligence boom. The dissatisfaction centers on the performance of chips used for specific parts of the inference workload. Nvidia still dominates the market for chips used to train large AI models, but inference has become a critical new battleground. The push by OpenAI and others to find better inference chips is a significant challenge to Nvidia's dominance in AI hardware, and it comes while the two companies are still negotiating an investment deal. Those talks, over a potential investment of up to $100 billion by Nvidia in exchange for a stake in the startup, have dragged on for months, suggesting complications that go beyond a straightforward hardware purchase.

Hardware Architectures Compared

OpenAI attributes the limitations it sees in current Nvidia and AMD GPUs largely to their reliance on memory that sits outside the processor. Fetching data from these separate components adds latency, which slows the responsiveness of applications such as chatbots, where interaction speed matters most to users. The search for faster inference is therefore driving interest in chips that place a large amount of memory on the same piece of silicon as the processing units, typically in the form of SRAM (static random-access memory). This design can deliver significant speed advantages: for AI systems serving millions of requests at once, shortening the distance and time needed to reach data is crucial. Inference is also more memory-intensive than training, because chips spend a larger share of their time retrieving data than performing mathematical operations. That makes on-chip memory especially attractive for the real-time performance that advanced AI services demand.
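To make the memory-bottleneck argument concrete, here is a minimal roofline-style sketch in Python. It estimates a lower bound on the time to generate one token as the larger of two terms: the time to stream the model's weights from memory and the time to perform the arithmetic. The names `Chip` and `decode_step_seconds`, the model size, and every chip figure are hypothetical illustrations, not specifications of any Nvidia, AMD, Cerebras, or Groq product. The model also ignores KV-cache traffic, interconnect costs, and the fact that a large model would have to be split across many SRAM-based chips.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    """Hypothetical accelerator described only by two roofline parameters."""
    name: str
    mem_bandwidth_bytes_per_s: float  # bandwidth to wherever the weights live
    peak_flops_per_s: float           # peak arithmetic throughput


def decode_step_seconds(chip: Chip, n_params: float,
                        bytes_per_param: float = 2.0,
                        batch: int = 1) -> tuple[float, bool]:
    """Lower bound on one decoding step (one new token per sequence in the batch).

    Simplified model (an assumption): every weight is read once per step and
    shared across the batch; KV-cache reads and interconnect costs are ignored.
    Returns (seconds, is_memory_bound).
    """
    bytes_moved = n_params * bytes_per_param
    flops = 2.0 * n_params * batch  # roughly one multiply-add per weight per sequence
    memory_time = bytes_moved / chip.mem_bandwidth_bytes_per_s
    compute_time = flops / chip.peak_flops_per_s
    return max(memory_time, compute_time), memory_time >= compute_time


if __name__ == "__main__":
    # Illustrative numbers only; they do not describe any real product.
    external_memory = Chip("hypothetical external-memory GPU", 3e12, 1e15)
    on_chip_sram = Chip("hypothetical on-chip SRAM design", 30e12, 1e15)
    for chip in (external_memory, on_chip_sram):
        seconds, memory_bound = decode_step_seconds(chip, n_params=70e9)
        print(f"{chip.name}: {seconds * 1e3:.1f} ms per token, "
              f"memory-bound={memory_bound}")
```

With a batch of one, both hypothetical chips come out memory-bound, so the only lever that shortens each step is memory bandwidth, which is exactly what on-chip SRAM designs aim to raise. Increasing the batch spreads each weight read across more requests and can tip the step toward being compute-bound instead.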

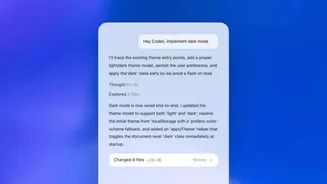

Strategic Partnerships Explored

OpenAI has been exploring collaborations with startups that specialize in new chip designs, including Cerebras and Groq, to improve inference speed. Its Codex product, which generates computer code, has reportedly faced performance problems that some staff attribute partly to the limitations of Nvidia's GPU-based hardware. OpenAI CEO Sam Altman has stressed how much speed matters to users of the company's coding models, noting that customers place a premium on fast code generation. OpenAI accordingly pursued a deal with Cerebras, whose hardware is designed for these demanding speed requirements, and the two companies recently announced a commercial partnership. Competitors are also relying on specialized hardware: Google's Tensor Processing Units (TPUs), custom chips designed for the kinds of calculations inference requires, power deployments of models from Google and Anthropic and can offer performance advantages over general-purpose AI chips. This competitive pressure gives OpenAI further reason to secure hardware that provides a clear edge in speed and efficiency.

Nvidia's Competitive Response

As OpenAI explored alternative inference hardware, Nvidia moved to strengthen its own technology and competitive position. The company reportedly approached firms building chips with large amounts of on-chip memory, including Cerebras and Groq, about possible acquisitions. Cerebras declined and instead struck its commercial agreement with OpenAI, while Nvidia licensed Groq's technology in a non-exclusive, all-cash deal valued at $20 billion. By securing Groq's intellectual property and talent, Nvidia appears to be shoring up its capabilities in areas of the fast-moving AI industry where general-purpose GPUs may fall short. Because the license is non-exclusive, other companies can still use Groq's technology, but Groq is reportedly shifting its focus to cloud-based software, partly because Nvidia hired away its chip designers. Nvidia says Groq's intellectual property is highly complementary to its product roadmap, underscoring its intent to lead the AI chip market in both training and inference.