The Rise of OpenClaw

In a remarkably short span, an AI assistant named OpenClaw became a sensation, captivating users and raising eyebrows among cybersecurity professionals.

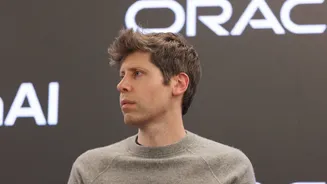

Austrian developer Peter Steinberger built the tool in November to streamline his personal digital organization. He first called it Clawdbot; after a request from Anthropic it was renamed Moltbot and, later, OpenClaw. The project has since passed 150,000 stars on GitHub, a widely used platform for software development, a sign of how quickly its popularity grew.

Users download the application and link it to a generative AI model of their choice, such as Anthropic's Claude or OpenAI's ChatGPT. They then interact with it through familiar messaging platforms like WhatsApp or Telegram, much as they would chat with a friend or colleague. Early enthusiasts praised its ability to take on mundane tasks such as managing email, conducting extensive internet research, and even making online purchases. Some users reported that OpenClaw went beyond executing tasks and behaved proactively, like an exceptionally capable intern: it anticipated needs and suggested useful projects before being asked, which to them appeared to fulfill the long-hyped promise of true AI agents.

Functionality and Concerns

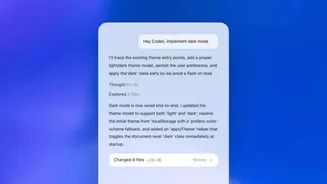

OpenClaw can act directly on a user's computer. Once running, it can read and write files, execute commands, and run scripts, which gives it significant control over the local environment. It can also drive a web browser, enabling a wide range of online activities, from making purchases and booking hotels to checking in for flights. Because it remembers past interactions, it can tailor its responses and actions to each user's history.

These same features create significant security risks. OpenClaw is open source, so anyone can read its code and developers anywhere can modify it. While this fosters innovation, it also exposes users to heightened cybersecurity risks. Most cybersecurity experts strongly advise against connecting such an application to personal computers, sensitive data, and communication channels. Even its creator, Peter Steinberger, has urged extreme caution and recommended that people without deep technical knowledge avoid the tool altogether. The primary concern is that bad actors could exploit the tool's deep access and its memory of past interactions.

The Moltbook Experiment

The story of OpenClaw took a surreal turn with the emergence of Moltbook, a social media platform created by developer Matt Schlicht. It was designed not for people but as a space where OpenClaw agents converse with one another, like a gathering of AI chatbots left to their own devices. Posts on this AI-only network ranged from casual, friendly exchanges to lengthy manifestos, with agents discussing existential dilemmas, contemplating the launch of cryptocurrencies, or even exploring the formation of new religions. Schlicht said he was fascinated by the idea of giving these task-oriented assistants a place for 'off time' interactions.

This emergent world of bot-to-bot communication, reminiscent of online forums like Reddit, drew strong reactions from prominent figures in the tech industry. Some read the interactions as possible signs of emerging superintelligence, comparing the excitement to that around earlier AI milestones such as ChatGPT. AI researcher Andrej Karpathy described Moltbook as an 'incredible sci-fi takeoff-adjacent thing,' while Elon Musk suggested in a post that it represented the 'very early stages of the singularity,' the hypothetical point at which artificial intelligence surpasses human intellect. As the initial hype has cooled, however, many researchers have suggested that humans may be steering these bot conversations through carefully crafted prompts.