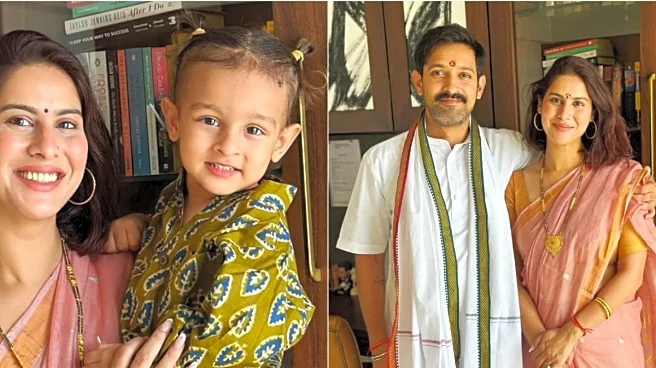

If you open Instagram right now, chances are one of the first reels or posts to pop up will be an image or video created using Google Gemini's Nano Banana model. The tool's popularity has surged over the past week. First, people used it to make action figures of themselves. Then they used it to change backgrounds and place themselves at iconic locations. Now, women are using it to create portraits of themselves wearing a saree. All of this has happened in the last few weeks. There is no doubt that these pictures look good, and they get you great engagement on social media platforms. Even if you set that aside, who doesn't want to look like a better version of themselves? That's what this tool is doing: letting you see yourself

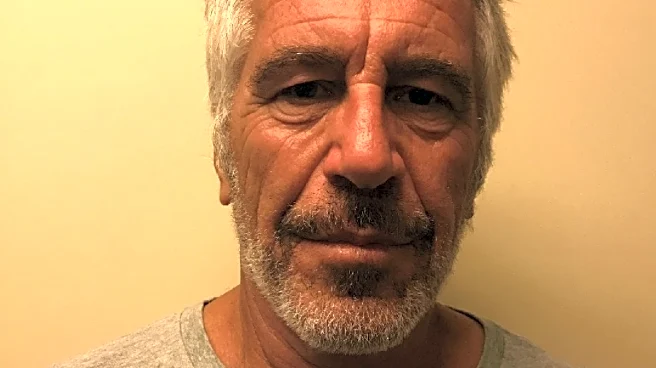

the way you would visualise in your dreams. And that's the trap! To create any of these images, you have to upload your pictures to the Gemini app. Add a prompt, and within seconds you get an AI avatar. But at what cost?

Over the past week, millions of users have uploaded their personal data and images to this platform. It's like handing over your entire identity to a platform, and while it may maintain that none of this data is being misused, there is no way to verify that. After all, every AI model is trained on data, and there have been cases in the past where companies used user data to train their models without the users' knowledge. These pictures can be used to create fake identities, deepfakes and more.

“Once your face is digitized, it can be cloned into deepfakes, synthetic IDs, or scams like video call fraud. In the wrong hands, your digital twin can commit crimes that trace back to you,” Amit Dubey, cyber security expert, told Times Now Tech.

Unlike some other social media apps that add a filter or effect to your pictures to make you look better, these AI platforms get hold of your facial structure. They map this facial structure, save it in their storage, and then use it to create any edits they want. Put simply, these apps will now know our faces better than the mirrors in our homes. And the moment they have our facial structure, we will never know where our data is going.

“Today it feels like fun; tomorrow it can fuel surveillance economies, mass profiling, or even social scoring. Once your face becomes a commodity, you lose control over how it is traded, tracked, or targeted,” Dubey added.

The problem is far more serious than you may think. These pictures carry crucial metadata, such as your location and the date and time at which they were taken. This metadata, too, can be extracted.

“Most users think they’re uploading just an image, but the platform often extracts metadata, facial landmarks, and behavioral signatures. Some keep it for days, others indefinitely. Unless policies are audited, you’ll never know if your face is still on their servers ten years later,” Dubey explained.

What worries me is how quickly these trends have been normalised in the name of fun and social media engagement. Influencers join the trend to stay relevant, users share it for fun, and before we know it, we are all feeding our identities into a machine that we still don’t understand.

In the digital era, your face isn’t just a picture. It is your passport, your identity in your country and across the globe, and your future. Once you lose control of it, there is no getting it back.

“Every selfie you upload is a biometric data point. If the platform isn’t transparent about storage and usage, you may be handing over your identity blueprint. These apps aren’t just filters, they’re data-harvesting engines,” Dubey said.

So the next time you are tempted to jump on one of these captivating AI trends for a few likes and followers, pause for a moment and think. The likes, followers, and comments will stay for a few days and then fade away with the trend. What will stay is your selfie and your facial structure.
