“We love working with NVIDIA and they make the best AI chips in the world. We hope to be a gigantic customer for a very long time,” Altman wrote in a post on X Tuesday, adding that “I don't get where all this insanity is coming from.”

Altman’s remarks came after a Reuters report, citing sources familiar with the matter, said OpenAI had grown dissatisfied with aspects of Nvidia’s latest AI chips — particularly for inference workloads — and had been exploring alternatives since last year.

Inference in focus

According to Reuters, OpenAI’s concerns centre on AI inference — the stage when trained models generate responses to user queries — rather than training, where Nvidia continues to dominate with its graphics processing units (GPUs).

The report said OpenAI was not fully satisfied with how quickly Nvidia’s hardware could deliver answers for specific use cases, such as software development and AI-to-software communication. One source said OpenAI was seeking new hardware that could eventually meet about 10 per cent of its inference computing needs.

Inference has emerged as a new battleground in the AI arms race. While Nvidia’s GPUs have become the gold standard for training large models like GPT, inference requires chips optimised for memory access and rapid response times, particularly as AI tools scale to millions of users.

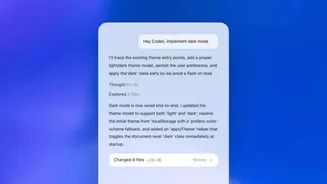

Inside OpenAI, the issue became particularly visible in Codex, its code-generation product, where staff attributed some performance limitations to GPU-based hardware, the report said.

On a January 30 call with reporters, Altman said that customers using OpenAI’s coding models “will put a big premium on speed for coding work,” adding that performance expectations vary between enterprise coding tools and casual ChatGPT usage.

Deals, delays and diversification

The report also said OpenAI had explored partnerships with Advanced Micro Devices (AMD), Cerebras and Groq for inference chips designed with large amounts of on-chip memory (SRAM), which can offer speed advantages over traditional GPU architectures that rely more heavily on external memory.

OpenAI last month announced a commercial deal with Cerebras aimed at boosting inference speed. Reuters reported that Nvidia subsequently signed a $20-billion non-exclusive licensing deal with Groq, a move industry executives viewed as an effort to strengthen its technology portfolio amid intensifying competition.

The developments come against the backdrop of a proposed investment by Nvidia of up to $100 billion in OpenAI, first disclosed in September. The deal, which would give Nvidia a stake in the AI startup and provide OpenAI with capital to buy advanced chips, was initially expected to close within weeks but has since faced delays, Reuters said.

Over the weekend, however, Nvidia CEO Jensen Huang dismissed talk of tensions, calling such reports “nonsense” and reiterating plans for a significant investment in OpenAI.