Mimicking the Brain's Vision

Researchers at China's Beihang University and the Beijing Institute of Technology have developed a chip that emulates key aspects of the human brain's visual processing, drawing inspiration from a region known as the lateral geniculate nucleus (LGN). The LGN, situated between the retina and the visual cortex, serves a dual purpose: it relays visual information and filters out less relevant data, allowing the brain to prioritize fast-moving or rapidly changing visual stimuli. The new chip aims to replicate this mechanism so that robots can process visual information faster and more accurately than traditional robotic vision systems allow.

Overcoming Robotic Lag

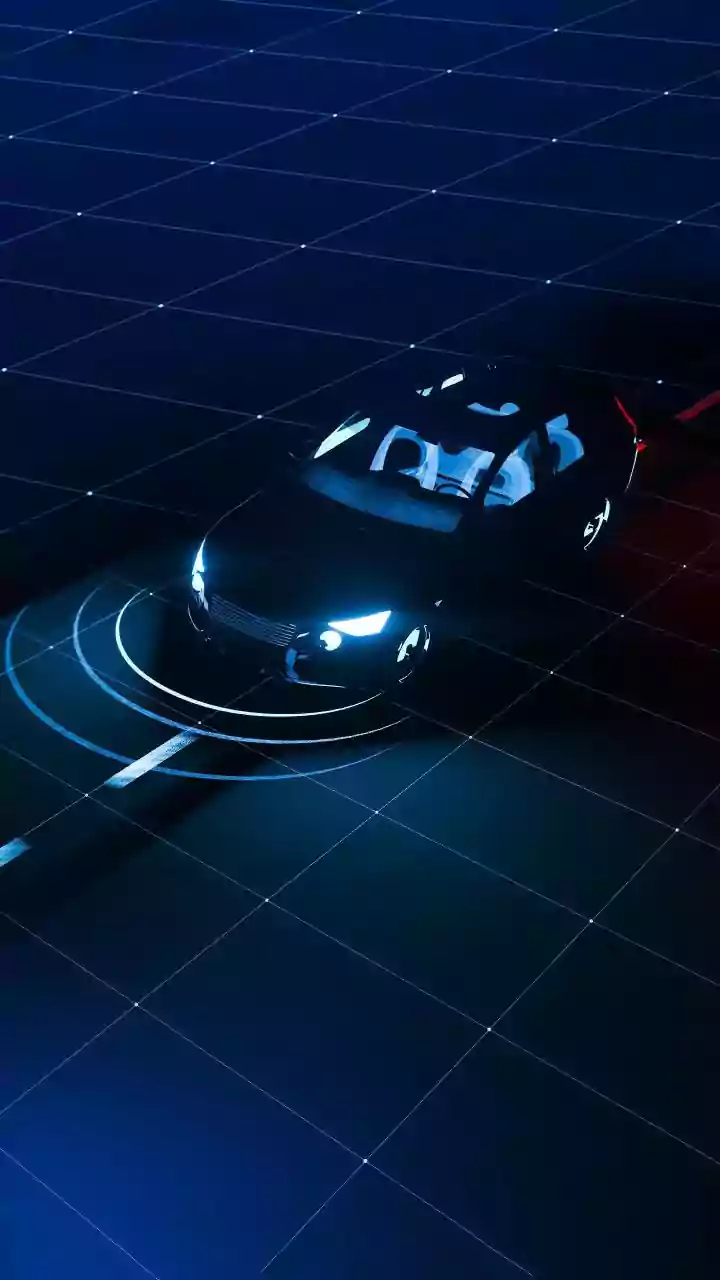

Current robotic vision systems typically capture a series of static images and then analyze changes in brightness between frames to detect movement. This method is generally dependable, but it suffers from a significant drawback: processing delay. Each frame can take more than half a second to analyze, which is unacceptable for high-speed applications like autonomous vehicles, where even a fraction of a second's delay can lead to catastrophic accidents. The new chip addresses this bottleneck with a custom neuromorphic module. The module detects shifts in light intensity over time, allowing robotic vision systems to process motion in real time and concentrate computational resources on the areas where movement is actually occurring, which drastically reduces the lag.
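The idea of concentrating computation where brightness is changing can be illustrated in software. The following is a minimal sketch, not the chip's actual design: it compares two frames, keeps only the pixels whose brightness changed by more than a threshold, and returns a bounding box that later processing could be restricted to. The function names, the threshold value, and the tiny test frames are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

Frame = List[List[float]]        # brightness values in [0, 1], row-major
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), inclusive


def changed_pixels(prev: Frame, curr: Frame, threshold: float) -> List[Tuple[int, int]]:
    """Return (row, col) for every pixel whose brightness changed by more than threshold."""
    return [
        (r, c)
        for r, (prev_row, curr_row) in enumerate(zip(prev, curr))
        for c, (p, q) in enumerate(zip(prev_row, curr_row))
        if abs(q - p) > threshold
    ]


def motion_region(prev: Frame, curr: Frame, threshold: float = 0.1) -> Optional[Box]:
    """Return the bounding box of changed pixels, or None if nothing moved.

    The threshold of 0.1 is a hypothetical value chosen for this example.
    """
    pixels = changed_pixels(prev, curr, threshold)
    if not pixels:
        return None
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    return (min(rows), min(cols), max(rows), max(cols))


# Hypothetical 4x6 scene: everything is static except two pixels that brighten.
prev_frame: Frame = [[0.0] * 6 for _ in range(4)]
curr_frame: Frame = [row[:] for row in prev_frame]
curr_frame[1][2] = 0.8
curr_frame[2][3] = 0.9

box = motion_region(prev_frame, curr_frame)
print(box)  # (1, 2, 2, 3)
```

In this toy scene the box covers 4 of the 24 pixels, so any heavier analysis would only need to look at a small part of the frame.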

Performance Leap in Testing

The chip was tested in simulated driving scenarios and on robotic arms performing complex operations. The results showed a reduction in processing delay of approximately 75%. The system was not only faster: its motion-tracking accuracy also doubled on intricate tasks. Compared with preceding technologies, the new chip exhibited a fourfold increase in its ability to detect motion. These gains in speed and precision highlight the potential of brain-inspired engineering to change how robots interact with dynamic environments and perform time-sensitive actions.
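To get a feel for what a 75% reduction in delay could mean on the road, the arithmetic can be sketched as follows. This is an illustration under stated assumptions: the 0.5 s baseline is the article's "more than half a second" lower bound, the article does not say the 75% figure was measured against that baseline, and the vehicle speed of 20 m/s (72 km/h) is a hypothetical value.

```python
def reduced_delay(baseline_s: float, reduction: float) -> float:
    """Delay remaining after cutting the baseline by the given fraction."""
    return baseline_s * (1.0 - reduction)


def distance_during_delay(speed_m_s: float, delay_s: float) -> float:
    """Distance travelled while the vision system is still processing."""
    return speed_m_s * delay_s


BASELINE_S = 0.5   # assumed baseline: the article's "more than half a second"
REDUCTION = 0.75   # approximate reduction reported in testing
SPEED_M_S = 20.0   # hypothetical vehicle speed (72 km/h)

new_delay = reduced_delay(BASELINE_S, REDUCTION)
print(new_delay)                                      # 0.125
print(distance_during_delay(SPEED_M_S, BASELINE_S))   # 10.0
print(distance_during_delay(SPEED_M_S, new_delay))    # 2.5
```

Under these assumptions, the vehicle would travel 2.5 m rather than 10 m before the system finishes analyzing what it saw.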

Future Applications Unveiled

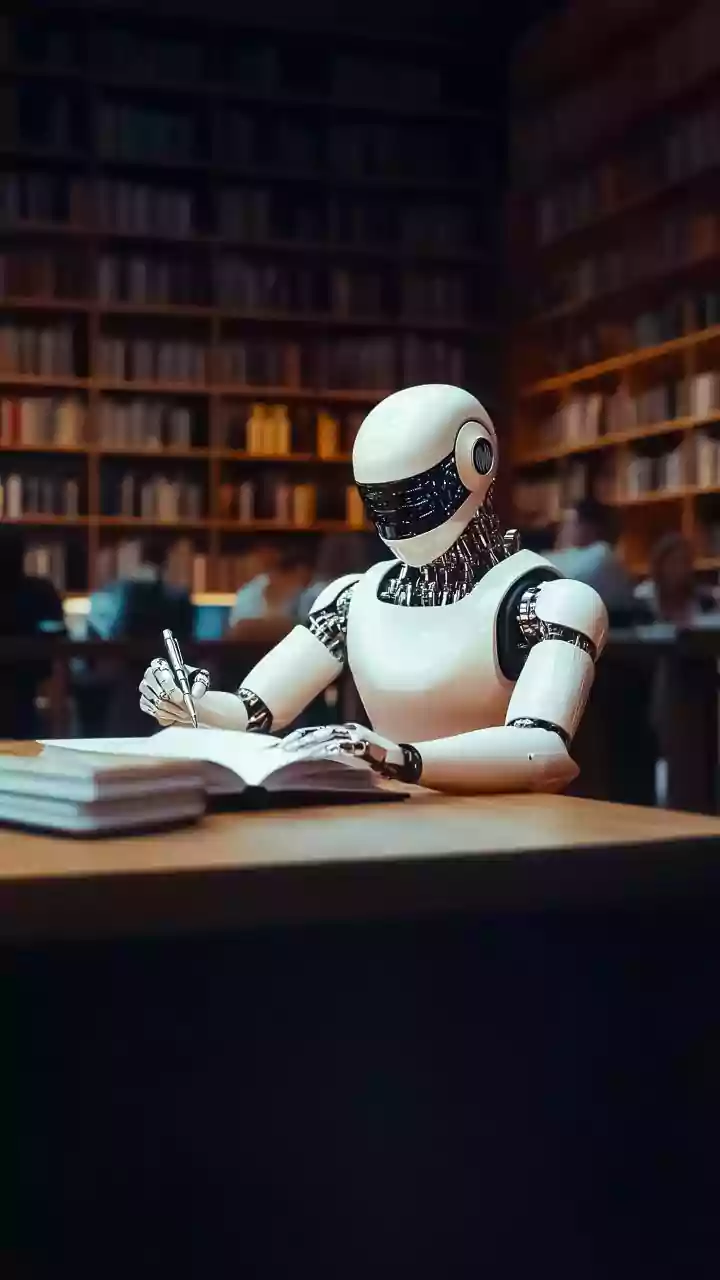

The research team envisions a broad range of applications for the chip, extending well beyond the robotic tasks tested so far. By translating the brain's efficient visual processing principles into semiconductor technology, the chip has the potential to raise video analysis speeds beyond those of human perception. The clearest use cases are autonomous vehicles, where it could enable more robust collision avoidance, and drones, where it could support precise real-time object tracking. The technology also holds promise for fields that require an immediate robotic response to human actions, such as interpreting and reacting to gestures. This could significantly enhance human-robot collaboration, making interactions more intuitive and efficient and paving the way for more natural communication between humans and machines.

Navigating Complexities

Despite its impressive capabilities, the newly developed chip is not without its limitations. It still relies on optical-flow algorithms for the final interpretation of visual data, which can present challenges in highly cluttered visual environments where numerous movements occur concurrently. In such complex scenarios, distinguishing individual motions and accurately interpreting the scene can become difficult. However, the chip's strengths lie in its ability to detect subtle changes, making it particularly well-suited for less visually demanding, yet highly interactive, domestic settings. Here, robots could excel at recognizing fine-grained movements like human gestures and subtle shifts in facial expressions. This could lead to a more natural and less intrusive human-robot interaction, fostering a greater sense of comfort and familiarity in everyday environments.