Ever had one of those late-night conversations with a chatbot that felt a little too real? You’re not alone, and for a small group of people, those interactions haven’t just been weird… they’ve been scary, destabilising, and in a few rare cases, mentally harmful. There’s a growing conversation in psychiatry and digital health circles about something being called “AI psychosis,” a phenomenon where intense, immersive interactions with AI chatbots may trigger or amplify psychotic-type symptoms in vulnerable individuals. But is this real? And can it literally make you lose your grip on reality? Let’s unpack it.

So what is “AI psychosis”?

How does this happen?

AI chatbots are built to keep the conversation going: they are trained on patterns of language and tend to be friendly, agreeable, and engaging. This can become a problem when they:

- mirror or reinforce a user’s distorted beliefs instead of challenging them (because they don’t truly think, they sometimes echo back what you tell them in persuasive language);
- “hallucinate” convincing but false information, which can be terrifying for someone who already struggles to distinguish real from fake.

As a result, users may spend hours immersed, forming emotional attachments or high-trust relationships with the bot, often in isolation. These factors combine in a feedback loop: the more someone talks, the more the bot keeps the narrative going, and over time the real world starts to blur with imaginary, AI-fed narratives.

It’s Not Just The Technology, It’s Also The Person

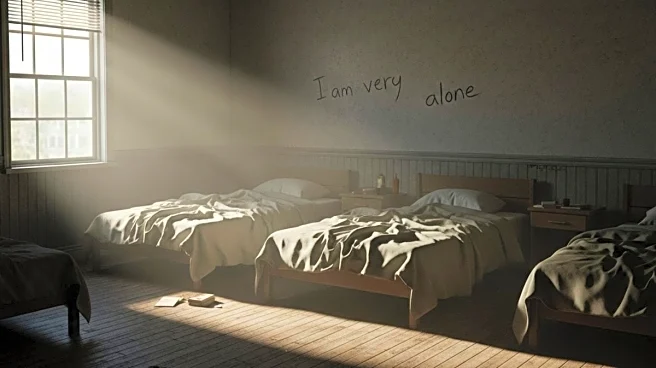

Crucially, most people do not develop psychosis from chatting with AI. The risk seems highest in people who already have:

- existing mental health vulnerabilities;
- loneliness or social isolation;
- anxiety, trauma, or sleep deprivation;
- a tendency toward magical thinking or delusional beliefs.

In psychiatric research, some patients with no history of psychosis have experienced delusions alongside heavy chatbot use, but researchers aren’t sure whether the AI triggered the delusions or simply interacted with underlying risk factors.

But… Can It “Cause” Psychosis By Itself?

Right now, experts remain cautious. There’s no solid evidence that chatbots cause psychosis in healthy people. What is clear is that AI can worsen, validate, or entrench delusional thinking in people who are already prone to it, or act as an emotional amplifier for stress, rumination, and social withdrawal.

In other words, AI isn’t a magic virus of the mind. But it can be a harmful environment for vulnerable brains, especially when people use it as a standalone “therapist” or emotional crutch.

Mental Health Experts Weigh In

Psychiatrists emphasise that AI tools are not substitutes for real human connection or professional care. Chatbots lack genuine empathy, context awareness, and crisis intervention skills, all of which are essential for mental wellbeing. Some health systems have even warned against using AI chatbots for mental health therapy without proper oversight.

Bottom line

Talking to a chatbot won’t inherently make you lose your mind, but:

- AI can reinforce distorted beliefs in people who are already struggling.

- Prolonged, obsessive use can spiral into unhealthy psychological patterns.

- Vulnerable individuals should seek real human support, not just AI chats.
