What's Happening?

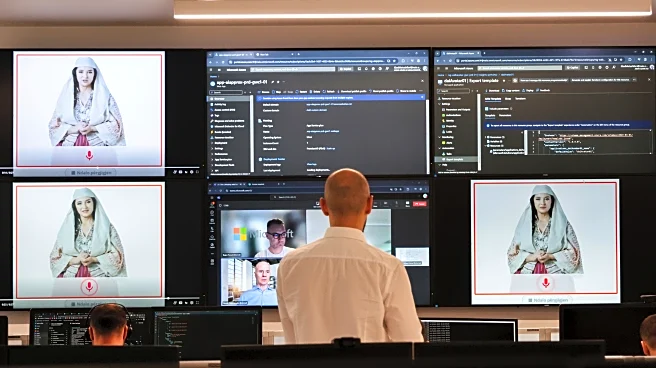

OpenAI is rolling out an age verification system for its ChatGPT platform, following a similar move by Discord. The system aims to ensure compliance with safety regulations by predicting users' ages based on their usage patterns. If the system cannot verify a user's age, it will require additional verification through a third-party service, Persona, which involves providing a government ID and a live selfie. This initiative is part of OpenAI's broader effort to implement safety features that restrict access to certain types of content, such as graphic violence and harmful behavior, unless users verify their age.

Why It's Important?

The introduction of age verification by OpenAI reflects a growing trend among tech companies to comply with regulatory demands for increased online safety. However, this move raises significant privacy concerns, as it involves collecting and analyzing user data to predict age. Critics argue that such measures could lead to a loss of anonymity and increased data security risks. The situation highlights the ongoing tension between regulatory compliance and the protection of user privacy, a challenge that many tech companies are likely to face as similar laws are enacted globally.

What's Next?

As OpenAI implements its age verification system, it may face scrutiny from privacy advocates and potential legal challenges. The company will need to balance regulatory compliance against user privacy, and how it does so could affect its reputation and user trust. The move could also prompt other tech companies to adopt similar measures, further shaping the landscape of digital privacy and regulation. Industry stakeholders will be watching OpenAI's approach closely, and it could set a precedent for future regulatory compliance efforts.