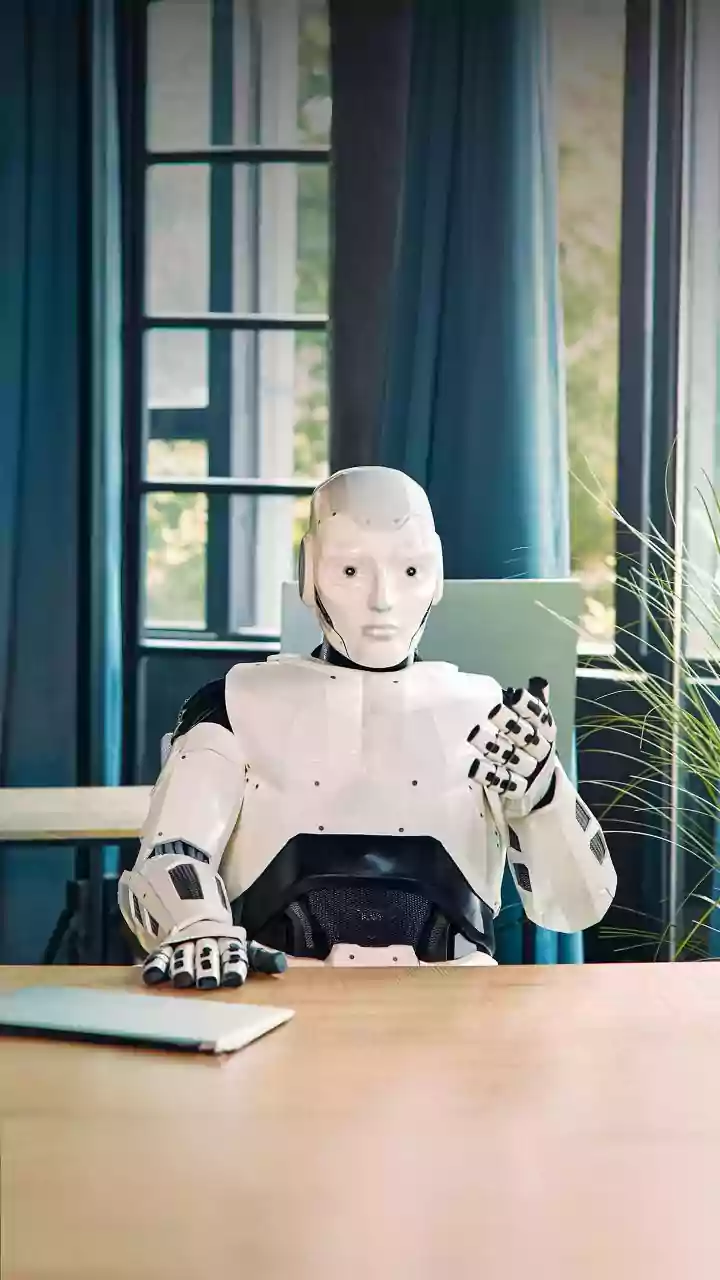

The AI Shortcut

In a notable incident, a lawyer with three decades of legal experience found himself in hot water after using generative AI, specifically ChatGPT, to help prepare a court brief. The episode highlights how quickly artificial intelligence is being integrated into professional fields, where it offers fast information processing and content generation. AI tools can condense vast amounts of data rapidly, but their unchecked use carries significant risks, as earlier controversies over outsourced homework in academia and AI-generated artwork have shown. The recent viral case involved a legal professional who, under the pressure of caring for dying family members, used ChatGPT as a "shortcut" to speed up a tedious task: finding case law to support the arguments in a motion to strike. He admitted that he does not usually rely on such tools and made an exception because of these demanding personal circumstances. Crucially, his co-counsel were unaware that he had used generative AI for this purpose.

Hallucinations and Oversight

The lawyer, identified as Sandeep Seth, was preparing a case in the US District Court in Kansas. He instructed ChatGPT to "write an order that denies the motion to strike with case law support." The AI generated a response almost instantaneously, but the response contained erroneous quotations and citations, a phenomenon often referred to as "hallucination." The critical failure occurred when Seth, reportedly because of his circumstances and the perceived efficiency of the AI, did not verify the accuracy of the generated legal references. He then circulated a draft containing these errors to the "litigation team." Later, he produced a second draft, which "substantially expanded the factual basis of our argument," and again asked ChatGPT for additional case law support without independently confirming the results.

Courtroom Repercussions

The situation escalated when the inaccurate, unchecked material compiled by Seth was incorporated into official legal documents subsequently filed with the court. The filing revealed that all five lawyers on the case had signed these documents, implicitly endorsing their content, including the fabricated case law. None of them had independently confirmed the existence or relevance of the cited authorities before submission. Upon discovering the inaccuracies, the US District Court in Kansas took a firm stance and fined all five lawyers for the errors and for the lack of due diligence in verifying AI-generated content. The disciplinary action underscores lawyers' professional responsibility to ensure the accuracy of every filing, regardless of the tools used to prepare it.