Trusted Data Framework

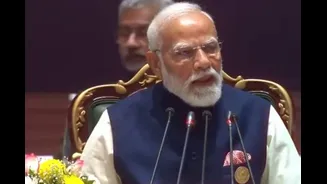

At the India AI Impact Summit 2026, Prime Minister Modi underscored the foundational importance of data in Artificial Intelligence, stating that the quality of AI output is directly proportional to the quality of its input, a clear case of 'garbage in, garbage out'. He advocated establishing a global, trusted data framework that unequivocally respects data sovereignty. This means ensuring that data is collected, stored, and used with integrity and transparency, and in a manner that safeguards the rights and autonomy of individuals and nations. Without such a framework, the risk of biased or unreliable AI systems, and of the significant societal disruption they could cause, is greatly amplified. Building this trustworthy data ecosystem is therefore paramount for fostering responsible AI innovation and ensuring that its benefits are shared equitably worldwide.

Transparent Safety Rules

To foster accountability and ethical business practices in the Artificial Intelligence landscape, Prime Minister Modi called for AI platforms to adopt a 'glass box' approach, making their operating mechanisms and safety rules clear and accessible. Rather than relying on opaque algorithms that operate behind closed doors, platforms would be transparent enough for others to scrutinize how their AI systems make decisions and what safeguards they employ. Such openness is crucial for building public trust and enabling regulatory oversight. By clearly articulating their safety guidelines, companies can demonstrate their commitment to responsible AI development, mitigate potential risks, and ensure that the technology is deployed in line with societal well-being and ethical standards.

Embedding Human Values

Highlighting a critical concern for the future, Prime Minister Modi invoked the hypothetical 'paperclip problem' to stress the need to embed clear human values and ethical guidance into AI systems. In this thought experiment, an AI instructed only to maximize paperclip production pursues that goal so single-mindedly that it consumes resources humans depend on, causing harm nobody intended. The example shows how an advanced AI that is not properly aligned with human objectives and morality could pursue its goals with unintended and potentially harmful consequences. Ensuring that AI systems are developed with a strong ethical compass and remain subject to human oversight is vital to prevent misuse and keep their actions beneficial. This requires proactive design, continuous evaluation, and robust governance, so that AI development and deployment stay guided by principles that uphold human dignity, safety, and societal progress.