OpenAI has introduced parental controls for ChatGPT to make the platform safer for teenagers. The company says the move is aimed at providing a more “age-appropriate” experience for young users. These new guardrails come at a time when concerns about AI use among minors are intensifying, with lawsuits and regulatory scrutiny in the spotlight.

So, what exactly are these controls? How do they function? Why are companies like OpenAI introducing them? And do parents actually use these tools? Let’s answer these questions.

Who Can Use Parental Controls?

AI chatbots, including ChatGPT, are not suitable for children under 13. OpenAI’s new parental control feature is designed specifically for teenagers aged 13 to 17.

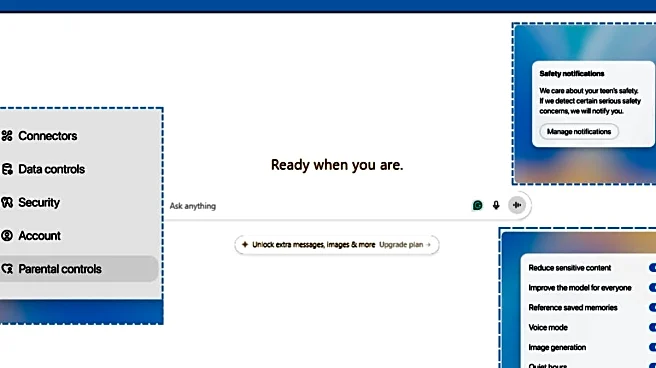

Introducing parental controls in ChatGPT.

Now parents and teens can link accounts to automatically get stronger safeguards for teens. Parents also gain tools to adjust features & set limits that work for their family.

Rolling out to all ChatGPT users today on web, mobile soon. pic.twitter.com/kcAB8fGAWG

— OpenAI (@OpenAI) September 29, 2025

To activate it, both the parent (or guardian) and the teen must have OpenAI accounts that are linked. This linking process can be initiated either by the parent sending an invite via email or text to the teen or by the teen sending an invite to the parent. The option to connect accounts is available under the Parental Controls tab in the settings menu.

How Does Parental Control Work?

Once enabled, the parental controls limit ChatGPT’s responses to sensitive topics. The system is designed to block explicit content, romantic or sexual roleplay, viral challenges, and what OpenAI calls “extreme beauty ideals.”

Parents also gain access to a control panel that enables them to regulate their teen’s interaction with the AI. They can set specific hours when ChatGPT is unavailable, prevent the bot from generating images, opt out of voice mode, and choose not to have their child’s conversations saved or used to train future AI models.

Importantly, while parents retain the power to adjust or remove these filters, teens cannot. And if a teenager decides to unlink their account, OpenAI ensures parents are notified immediately.

Alerts For Harmful Behaviour

The parental control feature does not give parents access to their teen’s conversations. However, if the AI detects possible signs of a serious safety risk, parents will receive an alert through email, SMS, push notification, or all three, unless they’ve opted out.

To balance privacy, OpenAI stresses it will only share the minimum necessary information for parents or emergency responders to intervene.

“No system is perfect, and we know we might sometimes raise an alarm when there isn’t real danger, but we think it’s better to act and alert a parent so they can step in than to stay silent,” the company said.

Why Has OpenAI Rolled Out This Feature?

The rollout follows a series of troubling incidents involving minors using ChatGPT. Recently, a family alleged that the chatbot encouraged their son to take his own life and filed a lawsuit against OpenAI.

The timing also coincides with the US Senate Judiciary Committee preparing hearings on AI-related risks.

“Guardrails help, but they’re not foolproof and can be bypassed if someone is intentionally trying to get around them. We will continue to thoughtfully iterate and improve over time. We recommend parents talk with their teens about healthy AI use and what that looks like for their family,” OpenAI said in a statement.

CEO Sam Altman, writing in a blog post on September 16, added: “ChatGPT by default should not provide instructions about how to commit suicide, but if an adult user is asking for help writing a fictional story that depicts a suicide, the model should help with that request.”

“‘Treat our adult users like adults’ is how we talk about this internally, extending freedom as far as possible without causing harm or undermining anyone else’s freedom,” he added.

How Other Tech Giants Handle It

OpenAI is not alone in introducing parental oversight. Several major platforms already have similar features:

Snapchat: With 428 million global users—20% of whom are teens between 13 and 17—Snapchat relies on privacy settings rather than strict parental controls. Parents can restrict who sees their child’s content, prevent strangers from viewing their phone number or location, and limit visibility in friend lists.

TikTok: Offers options to make accounts private, restrict who comments or sends DMs, and control visibility of liked videos and follower lists. Users can also decide whether TikTok recommends their account to others.

Google: In August 2025, Google launched “Parental Controls” in Android settings. Parents can restrict screen time, block apps, and filter explicit content on Chrome and Search. Its Family Link app lets parents approve purchases and monitor a child’s location.

Do Parents Actually Use These Controls?

While companies are building parental tools, experts say adoption remains low. Meta has noted that just 10% of parents of children with Instagram accounts enabled parental controls, and only a fraction of those actively adjusted them.

“The dirty secret about parental controls is that the vast majority of parents don’t use them,” said Zvika Krieger, ex-director of Meta’s responsible innovation team, in a statement to The Washington Post. “So unless the defaults are set to restrictive settings, which most are not, they do little to protect users.”

Even Nick Clegg, Meta’s global affairs chief, acknowledged the challenge. “One of the things we do find … is that even when we build these controls, parents don’t use them. So we have a bit of a behavioural issue,” Clegg said.

A 2020 report titled “Parents say they want parental controls, but actual use is low” noted:

“Parents see digital management as part of parenting, but it is also a lot of WORK! — i.e., it requires effort that people don’t necessarily always want to, can give, or comprehend.”

Another meta-study found that such controls can create tension at home. In strict households, teen pushback often led to arguments, while in lenient homes, older siblings sometimes ended up policing younger ones.
