What's Happening?

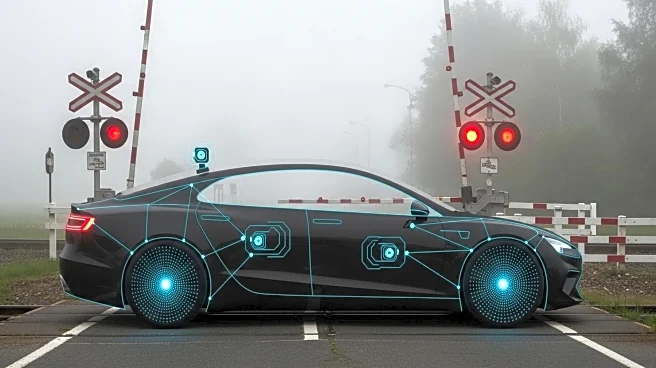

Tesla's Full Self-Driving (FSD) software is under scrutiny following reports of vehicles failing to stop at railroad crossings, posing significant safety risks. According to NBC News, several Tesla drivers have reported their cars malfunctioning near railroad tracks, with some narrowly avoiding collisions with oncoming trains. The National Highway Traffic Safety Administration (NHTSA) has acknowledged these incidents and is in communication with Tesla about the issue. Despite its name, the software is not fully autonomous and requires constant human supervision. The California DMV has previously accused Tesla of false advertising over FSD's capabilities, and the software is now branded 'Full Self-Driving (Supervised)'.

Why Is It Important?

The safety concerns surrounding Tesla's FSD software highlight the challenges and potential dangers of autonomous vehicle technology. These incidents raise questions about the reliability of Tesla's self-driving capabilities, which could erode consumer trust and damage the company's reputation. The issue also underscores the broader debate over the regulation and oversight of autonomous vehicles, as well as the ethics of deploying such technology before its safety problems are fully addressed. How regulators respond to these incidents could influence future policies and standards for self-driving cars, affecting the entire automotive industry.

What's Next?

Tesla may face increased regulatory scrutiny and potential legal challenges if the safety issues with its FSD software are not resolved. The company will likely need to address these concerns through software updates or additional safety measures. Meanwhile, competitors such as Waymo, which reportedly has not faced similar issues, may gain an advantage in the autonomous vehicle market. The ongoing dialogue between Tesla and regulators such as NHTSA will be crucial in determining the future of Tesla's self-driving technology and its acceptance by the public.