What's Happening?

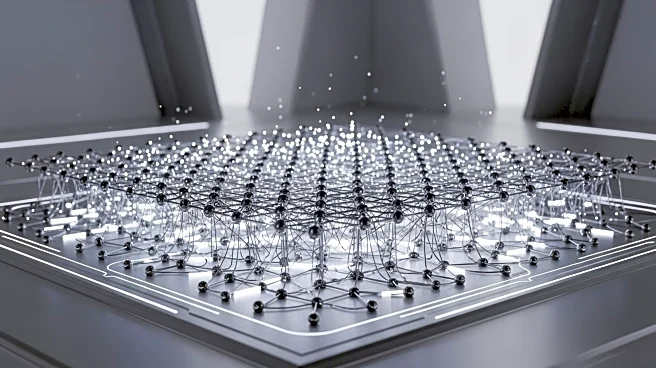

A research team from the Swiss Federal Institute of Technology in Lausanne (EPFL) has published a review in Nature on 'Physical Neural Networks' (PNNs), which compute with physical systems such as light and electricity rather than with digital logic. The approach aims to get around the limits of conventional digital GPUs by cutting the latency and energy consumption of AI training and inference. The review surveys several training techniques, including in silico training, physics-aware backpropagation, and feedback alignment. It divides PNNs into two categories: isomorphic PNNs, whose hardware is built to carry out the mathematical operations of a neural network directly, and broken-isomorphism PNNs, which give up that one-to-one correspondence and train the hardware's own physical transformations instead. The research highlights the potential of PNNs to run large-scale generative models and to support local inference and intelligent sensors.
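To make one of these training techniques more concrete, here is a minimal sketch of the idea usually described as physics-aware training: the forward pass runs on the (imperfect) physical system, while the gradient comes from an idealized digital model of it. Everything in the example is a hypothetical stand-in chosen for illustration, not taken from the review: the "device" is a single `tanh` unit with an assumed 10% gain mismatch and small read-out noise, and the names `physical_forward`, `model_grad`, and `train` are invented here.

```python
import math
import random

def physical_forward(w, x, rng):
    """Stand-in for real hardware (hypothetical): the device applies an
    unknown 1.1x gain and adds read-out noise that the digital model ignores."""
    return math.tanh(1.1 * w * x) + rng.gauss(0.0, 0.01)

def model_grad(w, x):
    """Derivative dy/dw of the idealized digital model y = tanh(w * x)."""
    return x * (1.0 - math.tanh(w * x) ** 2)

def train(data, w=0.1, lr=0.2, epochs=300, seed=0):
    """Stochastic gradient descent with a hardware forward pass and a
    digital-model backward pass."""
    rng = random.Random(seed)
    for _ in range(epochs):
        for x, target in data:
            y = physical_forward(w, x, rng)     # forward pass on the "hardware"
            error = y - target                  # error measured at the hardware output
            w -= lr * error * model_grad(w, x)  # gradient estimated from the digital model
    return w

def mean_squared_error(w, data, seed=1):
    """Average squared error of the noisy device output against the targets."""
    rng = random.Random(seed)
    return sum((physical_forward(w, x, rng) - t) ** 2 for x, t in data) / len(data)

# Targets follow tanh(0.8 * x); the device can match them when 1.1 * w is close to 0.8.
data = [(x / 10.0, math.tanh(0.8 * x / 10.0)) for x in range(-20, 21)]
w_trained = train(data)
```

Because the error is measured on the device itself, the learned weight compensates for the gain mismatch even though the digital model used for the gradient never sees it. In this toy setup, that is the practical difference from purely in silico training, where both passes would run on the model and the mismatch would go uncorrected.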

Why It's Important?

Physical Neural Networks represent a significant shift in AI computing, with the potential to be faster and more energy-efficient than conventional digital systems. That could matter a great deal for industries that rely on AI, such as healthcare and meteorology, by making data processing and model training more efficient. Reduced dependence on GPUs could also lower costs and make AI technologies more accessible. Challenges remain, however, including computational noise and the difficulty of adapting algorithms to analog hardware, and both will require further research and development.

What's Next?

Future research will focus on overcoming challenges such as computational noise and hardware adaptation. The commercial feasibility of PNNs depends on achieving energy efficiency significantly higher than that of digital devices. Researchers aim to design physical computers that address the challenge of scale by optimizing hardware and software together. Robust training methods that work across a range of scenarios will be crucial if PNNs are to reach practical applications.

Beyond the Headlines

The exploration of PNNs could lead to a paradigm shift in AI computing, one that emphasizes the role of analog systems in achieving computational efficiency. This may influence future AI infrastructure, encouraging a move towards scalable hardware designed to work in concert with advanced algorithms. Balancing neuromorphic design against the physical form of the hardware remains a core challenge, and it may inspire new designs that mimic characteristics of the human brain while exploiting what physical hardware does well.