What's Happening?

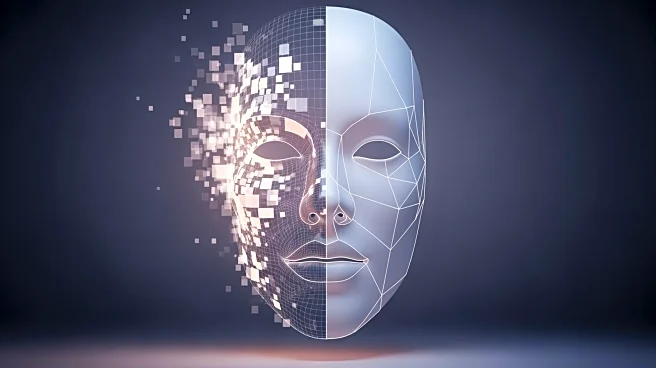

A recent study published in the journal Royal Society Open Science reports that AI-generated faces have become effectively indistinguishable from real human faces for untrained observers, which raises the risk of misuse. The study highlights how well generative adversarial networks (GANs) can create realistic synthetic faces that could be used for deceptive purposes. The researchers found that, without special training, people struggle to tell AI-generated faces from real ones. To address this, they developed a five-minute training session that teaches people to identify AI-generated faces by spotting common rendering glitches. The training significantly improved participants' ability to detect fake faces, suggesting one possible way to counter the growing problem of AI impersonation.

Why Is It Important?

The increasing realism of AI-generated faces poses significant challenges for digital security and authenticity verification. As synthetic faces become more common, they could be exploited for fraud, such as identity theft, or for misinformation campaigns. The study underscores the need for effective training that equips people to recognize AI-generated content. This matters all the more as AI technologies continue to evolve and spread into daily life, including social media and other forms of digital communication. Being able to identify AI-generated faces is therefore crucial for maintaining trust and security in online interactions.

What's Next?

Training programs that teach people to spot AI-generated faces are likely to expand as awareness of the issue grows. Researchers may continue to refine these methods to improve their effectiveness and how long the learned skills are retained. There could also be closer collaboration between technology developers and security experts to build tools that detect AI-generated content automatically. As the technology behind AI-generated faces advances, ongoing research and adaptation will be needed to stay ahead of potential misuse. Public awareness campaigns may also help educate users about the risks of AI-generated content and how to detect it.