What's Happening?

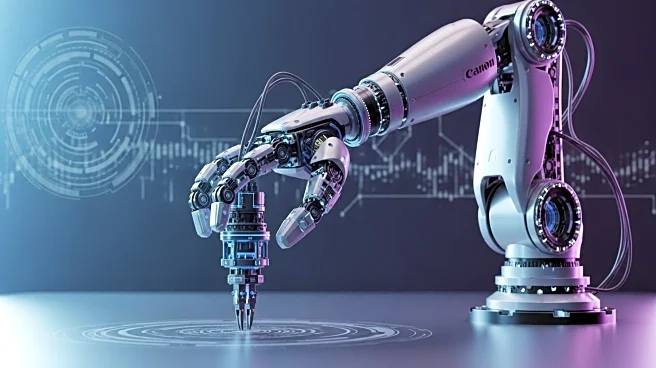

DeepMind has unveiled Gemini Robotics 1.5, a vision-language-action (VLA) model designed to help robots carry out complex, multi-step tasks with more autonomy and transparency. The release pairs two models: Gemini Robotics 1.5, which turns visual input and instructions into motor commands, and Gemini Robotics-ER 1.5, an embodied-reasoning model that plans and makes logical decisions about what to do next. Working together, the two models are meant to help robots generalize across diverse environments and tasks. Gemini Robotics 1.5 also reasons before it acts, producing intermediate steps that let a robot explain how it reached a decision. The system supports cross-embodiment learning, so skills learned on one robot can transfer to robots with different physical forms. DeepMind says safety is central to its work on embodied AI, and the system incorporates semantic reasoning about safety alongside collision-avoidance subsystems.
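The division of labor described above, with one model planning and another turning each step into actions after a short reasoning step, can be pictured as a planner/executor loop. The sketch below is a toy illustration under stated assumptions, not DeepMind's implementation or API: `plan`, `act`, `run`, `Step`, and `Trace` are hypothetical names, the planner simply splits the instruction on " then ", and the executor returns placeholder strings. The real models work from camera images and produce continuous motor commands.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    description: str


@dataclass
class Trace:
    # Records the executor's stated reasoning and the commands it emitted.
    thoughts: list = field(default_factory=list)
    actions: list = field(default_factory=list)


def plan(instruction: str) -> list:
    # Hypothetical stand-in for the embodied-reasoning planner
    # (the Gemini Robotics-ER 1.5 role). A real planner would reason
    # over images; this toy version just splits the text into steps.
    return [Step(part.strip()) for part in instruction.split(" then ") if part.strip()]


def act(step: Step, observation: dict, trace: Trace) -> str:
    # Hypothetical stand-in for the VLA executor (the Gemini Robotics 1.5
    # role): it writes down a reasoning step first, then emits a command.
    thought = f"To '{step.description}', first locate the target in {observation['scene']}."
    trace.thoughts.append(thought)
    command = f"motor_cmd({step.description!r})"  # placeholder, not real motor output
    trace.actions.append(command)
    return command


def run(instruction: str, observation: dict) -> Trace:
    # Orchestration loop: plan once, then execute each step in order.
    trace = Trace()
    for step in plan(instruction):
        act(step, observation, trace)
    return trace


trace = run("pick up the apple then place it in the bowl", {"scene": "the kitchen counter"})
for thought, action in zip(trace.thoughts, trace.actions):
    print(thought, "->", action)
```

Keeping the reasoning trace separate from the actions mirrors the transparency point in the article: the recorded thoughts are what a person could inspect to see why the robot chose each command.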

Why Is It Important?

The launch of Gemini Robotics 1.5 marks a significant step toward general-purpose robots that can reason, plan, and use tools. By moving beyond purely reactive systems, DeepMind says it is working toward solving artificial general intelligence (AGI) in the physical world. If the approach holds up in practice, robots could take on complex tasks with less human supervision, which may improve efficiency and reduce human error across industries. Transparency about how a robot reaches its decisions, together with the focus on safety, is crucial for earning public trust and ensuring ethical deployment. As the technology matures, it may enable new applications in sectors such as manufacturing, logistics, and healthcare, potentially driving economic growth and innovation.