What's Happening?

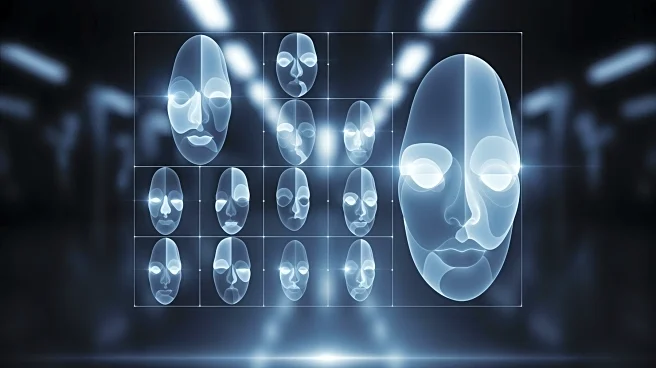

A recent study published in the journal Royal Society Open Science highlights the growing difficulty of distinguishing AI-generated faces from real human faces. The research, led by Katie Gray, an associate professor in psychology at the University of Reading, shows that without specialized training, people struggle to tell the two apart.

The study involved experiments with 'typical recognizers' and 'super recognizers', the latter being individuals with exceptional face-recognition skills. In initial tests, typical recognizers identified only 30% of AI-generated faces, while super recognizers identified 41%. After a brief five-minute training session designed to help participants spot common errors in AI-generated images, detection rates improved markedly: super recognizers' accuracy rose from 41% to 64%, and typical recognizers' from 30% to 51%. The training focused on identifying glitches such as unnatural skin textures and disproportionate facial features.

Why Is It Important?

The study underscores the risks posed by increasingly sophisticated AI-generated images, which can be used for deceptive purposes. As AI technology advances, the ability to create realistic synthetic faces poses challenges for security and privacy, particularly on social media platforms, where such images can be used to mislead users. The findings point to the need for effective training programs that equip people to identify AI-generated content and so reduce the risk of deception. This matters more as AI-generated content becomes more prevalent, with potential consequences for identity verification, online security, and the spread of misinformation.

What's Next?

The study suggests that further research is needed to assess the long-term effectiveness of the training and to develop more comprehensive programs that can be widely implemented. As AI technology continues to evolve, there may be a need for ongoing updates to training methods to keep pace with new developments. Additionally, there could be increased collaboration between researchers, technology companies, and policymakers to establish guidelines and tools for detecting AI-generated content. This could involve integrating detection capabilities into social media platforms and other digital services to help users identify and report suspicious content.