What's Happening?

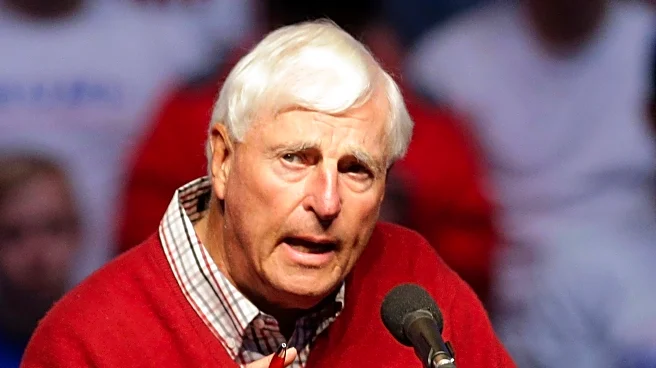

Andrej Karpathy, a founding member of OpenAI, has expressed skepticism about the current state of artificial intelligence, particularly regarding the development of artificial general intelligence (AGI).

In a recent interview with podcaster Dwarkesh Patel, Karpathy said that progress toward AGI is slower than public perception suggests. Despite advances in large language models, he believes AGI is still at least a decade away. Karpathy criticized the industry's tendency to overstate AI's capabilities, which he argues could harm the field. He noted that current AI models, while impressive, still need significant improvement, especially in areas such as long-horizon planning and structured reasoning. His comments have sparked discussion within the tech community, with some viewing them as a reality check for the industry.

Why It's Important

Karpathy's remarks are significant because they challenge the prevailing optimism in the tech industry about the pace of AI progress. His perspective suggests that the hype surrounding AI could create unrealistic expectations and lead to setbacks if the technology falls short of them. This could affect investment strategies, research directions, and public policy on AI development. Companies and other stakeholders in the AI sector may need to recalibrate their timelines and focus on the fundamental challenges Karpathy highlighted. His comments also underscore the importance of building reliable and safe AI systems, which is crucial for earning public trust and ensuring the technology's long-term success.

What's Next?

Following Karpathy's critique, AI projects and their claims may face increased scrutiny. Stakeholders might push for more transparency and more realistic assessments of AI capabilities. This could shift research priorities toward the core technical challenges Karpathy mentioned. There may also be calls for improved safety practices and regulatory measures to ensure AI systems are developed responsibly, along with broader discussion in the tech community about the ethical implications of AI and the need for robust frameworks to guide its development.