What's Happening?

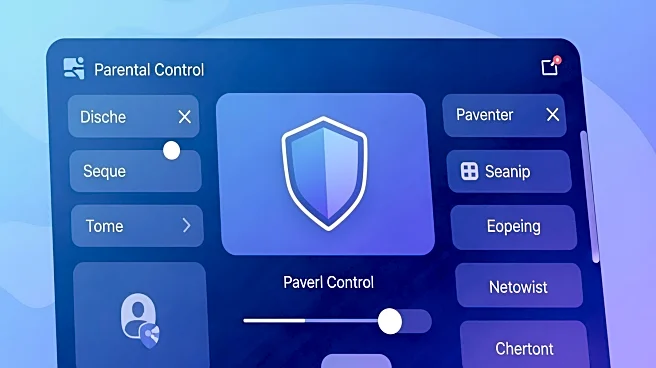

Meta has announced new parental control features for its AI experiences, aimed at enhancing teen safety on its platforms. These controls will allow parents to block certain AI characters and monitor conversation topics, with the option to turn off chats entirely. The initiative is part of Meta's broader effort to ensure age-appropriate content for teens, adhering to a PG-13 movie rating standard. The controls are set to roll out on Instagram early next year, available in English in the U.S., U.K., Canada, and Australia. This move comes amid increasing concerns about the impact of social media on teen mental health and legal actions against AI companies related to teen suicides.

Why Is It Important?

These parental controls are significant because they respond to growing concerns about social media's effect on teenagers' mental health. By giving parents tools to manage their children's interactions with AI, Meta aims to reduce the risk of exposure to inappropriate content. The initiative reflects a broader industry trend toward stronger safety measures for young users, which could influence public policy and corporate practices in the tech sector. It may also shape how other social media platforms approach teen safety, potentially leading to wider changes in content moderation and user interaction policies.

What's Next?

Meta plans to implement these controls on Instagram early next year, with potential expansion to other platforms. As the rollout progresses, stakeholders such as parents, educators, and policymakers will likely monitor its effectiveness in safeguarding teen users. The initiative may prompt other tech companies to adopt similar measures, influencing industry standards for AI interactions. Additionally, ongoing legal challenges related to social media's impact on mental health could drive further regulatory scrutiny and policy development in this area.