What's Happening?

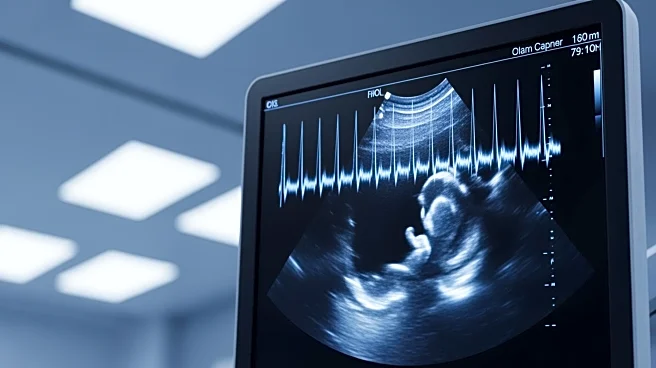

A new study has developed a visually grounded language model designed to improve the understanding of fetal ultrasound videos. The model, named Sonomate, aligns visual and textual data to assist sonographers in interpreting ultrasound scans. Its training dataset includes 525 video-audio pairs from the PULSE study, featuring scans performed by sonographers with varying levels of experience. The model addresses challenges such as heterogeneous language and asynchronous content, both of which are common in ultrasound procedures. This alignment of visual and textual features could improve diagnostic accuracy and efficiency.

Why Is It Important?

Sonomate could be a meaningful advance in medical imaging technology, particularly in obstetrics. By improving the interpretation of ultrasound videos, the model could support more accurate and timely diagnoses, benefiting both healthcare providers and patients. Effective alignment of visual and textual data could also lead to better training tools for sonographers and improved patient outcomes. Applied to real-time ultrasound interpretation, the model could streamline clinical workflows and reduce the cognitive load on healthcare professionals.

What's Next?

Future work may focus on refining Sonomate's capabilities and extending it to other areas of medical imaging. Researchers may explore integrating the model with existing ultrasound machines to provide real-time assistance during scans. Further studies could also investigate how the model performs across diverse clinical settings and how well it adapts to different ultrasound equipment. As the technology evolves, it may become a standard tool in obstetric care, improving the quality and efficiency of prenatal diagnostics.