What is the story about?

What's Happening?

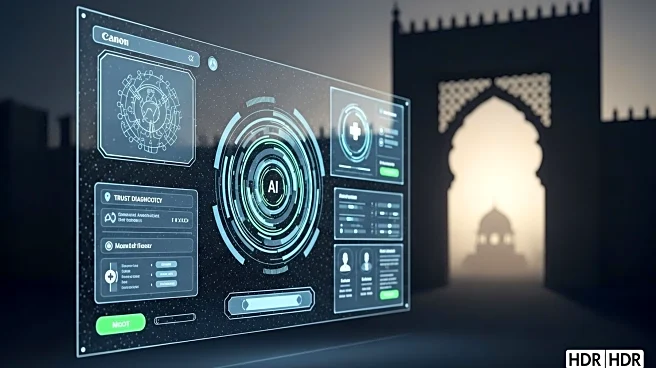

A recent study investigates what drives user trust in AI systems, focusing on ChatGPT in healthcare applications in Saudi Arabia. It examines how users perceive trust-related constructs (competence, reliability, transparency, security, trustworthiness, non-manipulativeness, and persuasiveness) when AI assists with medical decision-making. Trust matters especially in this setting because errors in AI-generated medical recommendations can directly affect patient health and clinical decisions. The study therefore stresses that AI systems must be reliable, secure, and transparent to earn the trust of both patients and healthcare professionals.

Why Is It Important?

The study's findings could help make AI-powered healthcare systems more trusted and more effective. By identifying the factors that shape trust, they give AI developers, healthcare policymakers, and medical institutions concrete targets for improving the reliability, security, transparency, and overall user satisfaction of systems like ChatGPT. Trust is central to AI adoption in healthcare because it determines how users interpret and act on AI-generated medical insights. Greater trust could, in turn, support wider adoption, better patient outcomes, and more efficient healthcare delivery.

What's Next?

The study offers practical recommendations for strengthening trust in AI-powered healthcare systems. These include improving reliability, security, and transparency, and ensuring compliance with data protection regulations such as the EU's GDPR and the US's HIPAA. The authors argue that AI systems should give clear, explainable recommendations so users can understand the basis for them. They also call for ethical regulation of AI's persuasive capabilities to prevent undue influence over patient decisions.

Beyond the Headlines

The study also raises ethical concerns about bias, fairness, and accountability in AI-driven healthcare. It calls for bias mitigation strategies, regulatory compliance, and clinician oversight to ensure responsible deployment. Finally, it examines the possibility of AI replacing human interaction in some decision-making contexts, underscoring why human oversight must remain part of AI-driven care.