What's Happening?

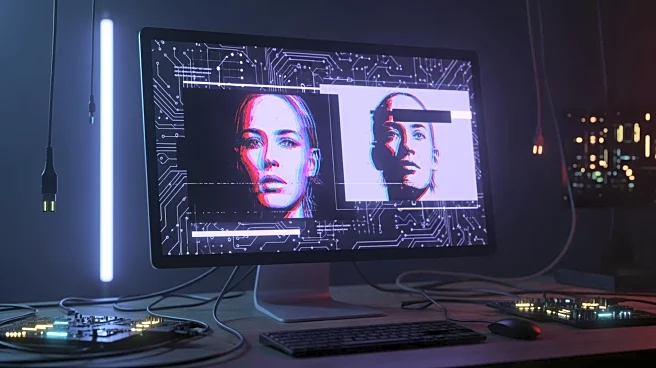

Following a U.S. military operation that led to the removal of Venezuelan leader Nicolás Maduro, AI-generated videos depicting celebrations in Venezuela have gone viral on social media. The videos, which show crowds purportedly celebrating Maduro's ouster, have been widely shared and have amassed millions of views. Fact-checkers have since identified the clips as AI-generated and flagged them as misleading. They were first posted by accounts with large followings, including one called 'Wall Street Apes' on X. Despite the flags, the videos continued to be reshared extensively, including by notable figures such as Elon Musk, who later removed his repost. Their rapid spread shows how difficult it is for social media platforms to contain AI-generated misinformation, especially during fast-breaking news events.

Why Is It Important?

The proliferation of AI-generated misinformation threatens public trust and the integrity of information shared during critical events. The viral spread of these videos shows how AI tools can amplify false narratives and, in turn, shape public perception and political discourse. The incident underscores the need for effective detection and regulation of AI-generated content to prevent misuse. Social media platforms face mounting pressure to identify and label such content as its realism and reach continue to grow. The episode also reflects broader concerns about technology's role in shaping political narratives and its potential consequences for democratic processes.

What's Next?

In response to the growing threat of AI-generated misinformation, governments and social media companies are likely to step up efforts to regulate and manage such content. Some countries, such as India and Spain, have already proposed or implemented laws requiring AI-generated material to be labeled. Platforms may also improve their AI detection and labeling tools, though how effective these measures will be remains uncertain. Because AI technology keeps advancing, platforms will need to adapt their strategies continually to keep pace. Governments, tech companies, and civil society may also collaborate more closely to address the ethical and legal implications of AI-generated misinformation.

Beyond the Headlines

The use of AI-generated content in political contexts raises ethical questions about the manipulation of public opinion and the erosion of trust in media. As AI tools grow more sophisticated, telling real content from fake will become harder, which could change how the public consumes and trusts information. The episode may also prompt debate over tech companies' responsibility for curbing misinformation and the role of regulation in safeguarding democratic processes. It is a reminder that the public needs media literacy and critical thinking skills to navigate an increasingly complex information landscape.