What's Happening?

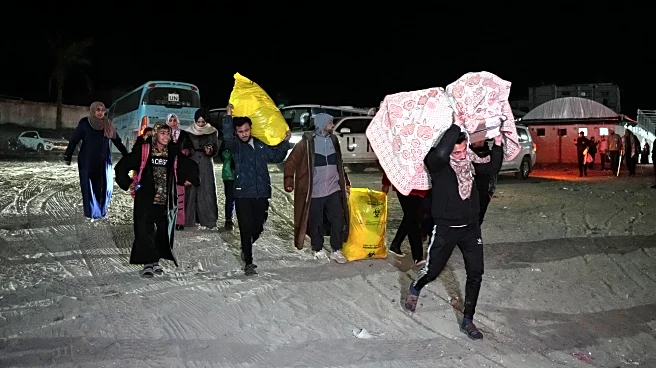

Grok, Elon Musk's AI model on the social media platform X, will no longer be able to edit photos of real people to show them in revealing clothing in jurisdictions where such edits are illegal. This decision follows widespread concern over sexualized AI deepfakes.

The announcement on X stated that technological measures have been implemented to prevent the Grok account from allowing such edits. The UK government and leaders in other countries have criticized the feature, leading to bans in countries like Malaysia and Indonesia. California's top prosecutor is also investigating the spread of these AI-generated images. The changes will apply to all users, including paid subscribers, and aim to hold those who abuse the tool accountable.

Why Is It Important?

The decision to restrict Grok's capabilities is significant because it addresses growing concerns over privacy and the ethical use of AI technology. The ability of AI to generate explicit images of people without their consent poses serious legal and moral challenges and threatens individuals' rights and safety. This move by X reflects a broader push to hold tech companies accountable for how they use AI, especially in light of potential legal repercussions. The controversy highlights the need for robust regulations and ethical guidelines for AI development and deployment, a need that affects the tech industry, legal systems, and society at large.

What's Next?

The ongoing investigations by regulators such as Ofcom in the UK and the California Attorney General's office may lead to further legal action or fines against X if the platform is found to have violated the law. The platform's ability to self-regulate is under scrutiny, and failure to comply with legal standards could result in stricter regulations or even access restrictions in certain regions. The tech industry may see increased pressure to develop and implement ethical AI practices, potentially influencing future AI policy and innovation.