What's Happening?

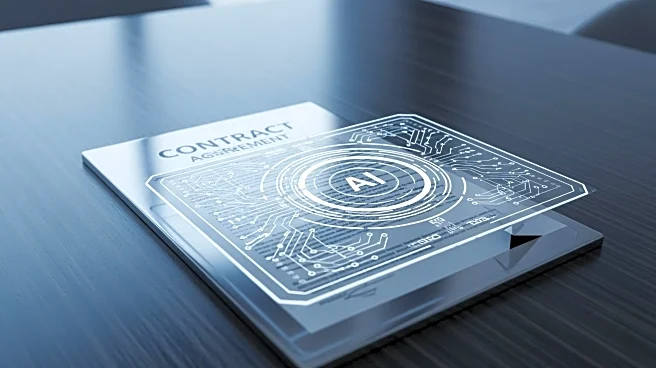

The Pentagon is considering terminating its $200 million contract with Anthropic, the developer of the Claude AI model, over disagreements about how the military may use the model. Anthropic has placed limitations on the use of its AI technology, particularly on its use for mass surveillance and autonomous weaponry, and these limitations conflict with the Pentagon's desire for more flexible use. Claude is currently the only AI model authorized for classified operations, but the Pentagon is exploring a unified agreement with other AI providers, such as Google, OpenAI, and xAI, that would allow their models to be used under an 'all lawful purposes' standard.

Why It's Important

This potential contract termination highlights the ongoing tension between ethical AI use and military needs. The Pentagon's push to use AI for 'all lawful purposes', without developer-imposed restrictions, raises concerns about the ethical implications of AI in warfare and surveillance. The outcome of this dispute could set a precedent for how AI technologies are integrated into military operations, shaping future contracts and collaborations between the defense sector and AI companies, as well as how those companies balance national security interests against ethical considerations.

What's Next?

If the Pentagon ends its contract with Anthropic, it will need a replacement that meets its operational requirements, including authorization for classified operations, which Claude is currently the only model to hold. This could intensify competition among AI providers for military contracts, potentially driving innovation and the adaptation of AI models for defense purposes. The decision may also prompt other AI companies to reevaluate their policies on military applications, influencing the broader industry approach to ethical AI use. Additionally, a unified agreement with multiple AI providers could streamline the Pentagon's AI operations and improve interoperability across different models.