What's Happening?

AI-manipulated images and videos related to the fatal shooting of Alex Pretti by federal officers in Minneapolis have spread widely across social media platforms, blurring the line between reality and fiction.

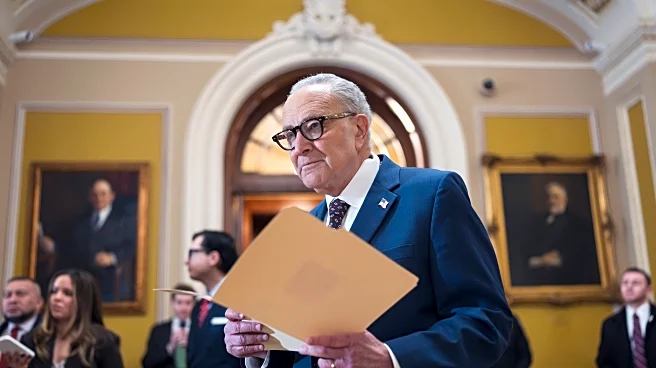

These AI-altered depictions have confused the public and added to the misinformation surrounding the incident. Some have circulated widely, including one image showing an ICE officer without a head, which Sen. Dick Durbin used in a Senate speech, unaware that it was inauthentic. The proliferation of such media highlights how advanced AI technologies make it harder to verify the authenticity of news content.

Why Is It Important?

The spread of AI-generated misinformation poses significant challenges to public trust in media and the ability to discern fact from fiction. As AI technologies become more sophisticated, the potential for creating realistic yet false depictions increases, complicating efforts to maintain accurate public discourse. The abundance of convincing fakes also produces what is known as the 'liar's dividend': bad actors can dismiss genuine media as fake, undermining accountability and truth. The situation underscores the need for improved tools and strategies to detect and counteract AI-generated misinformation, which is crucial for maintaining informed and democratic societies.

What's Next?

Efforts to combat AI-generated misinformation will likely intensify, with technology companies and policymakers seeking solutions to identify and mitigate the impact of deepfakes. This may involve developing more advanced detection tools and implementing stricter regulations on the use of AI in media. Public awareness campaigns could also play a role in educating individuals about the potential for AI manipulation and how to critically evaluate media content. Collaboration between tech companies, governments, and civil society will be essential to address the challenges posed by AI in the information landscape.