What's Happening?

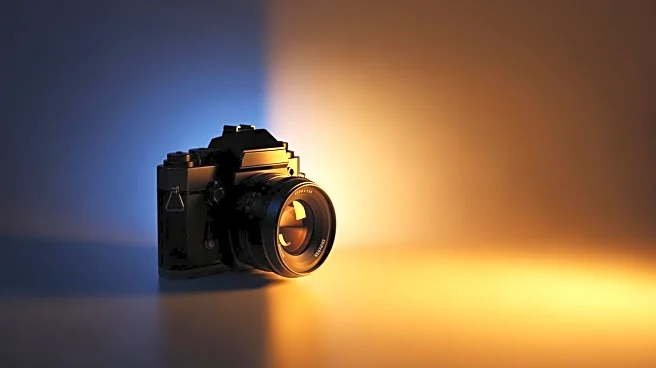

AI companies have downloaded, without permission, more than 15.8 million YouTube videos from over 2 million channels to train AI products. The total includes nearly 1 million how-to videos. The videos appear in at least 13 different data sets distributed by tech companies, universities, and research organizations. They are often anonymized, with titles and creator names removed, and are retrieved by their unique identifiers. This practice violates YouTube's terms of service, yet the platform has not taken significant action to prevent it. Whether copyrighted videos may legally be used for AI training is being contested in ongoing lawsuits, with tech companies arguing that such use constitutes "fair use."

Why Is It Important?

The unauthorized use of YouTube videos for AI training poses significant challenges for content creators and may reduce their motivation to share work on platforms like YouTube. As AI-generated content competes with human-made videos, creators may struggle to hold on to their audience and revenue. The result could be a shift in online publishing in which AI-generated content becomes more prevalent, threatening creators' livelihoods and changing how content is made and consumed.

What's Next?

Legal battles are expected to continue as courts determine whether AI training constitutes fair use of copyrighted material. The outcome of these cases could have profound implications for both content creators and the AI industry. If tech companies are allowed to keep using creators' work without permission, original content on platforms like YouTube may decline. AI-generated videos are also likely to become more mainstream, further challenging human creators.

Beyond the Headlines

Using creators' work without consent raises ethical questions about how to balance technological advancement against intellectual property rights. As AI tools become more sophisticated, the potential for misuse grows, including the spread of misinformation and the creation of deepfakes. Countering that misuse will require robust detection methods and legal frameworks that protect both creators and consumers.