What is the story about?

What's Happening?

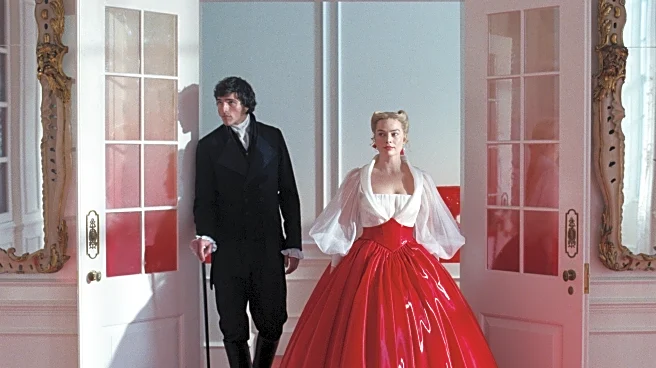

Recent developments in generative artificial intelligence (AI) have raised significant concerns within the scientific community, particularly in nanomaterials research. A comment article highlights the ability of AI to create fake microscopy images that are virtually indistinguishable from real ones. This capability creates a risk of image fabrication in scientific papers, potentially undermining the integrity of published research. The article emphasizes the need for education and a strong lab culture to prevent unethical practices, suggesting that research integrity courses should be mandatory in PhD programs. Publishers are also employing AI tools to detect image manipulation, a task that peer review processes are not designed to perform.

Why Is It Important?

The potential misuse of AI-generated images in scientific research could have far-reaching implications for the credibility of scientific publications. If left unchecked, such misuse could lead to a loss of trust in scientific findings, affecting industries that rely on accurate and reliable research, such as pharmaceuticals, materials science, and technology development. The issue underscores the importance of maintaining rigorous ethical standards and the role of publishers, universities, and researchers in safeguarding the integrity of scientific work. Collaboration between AI tool developers and science integrity experts is crucial to developing effective detection methods and maintaining trust in scientific processes.

What's Next?

The scientific community is likely to see increased efforts to integrate AI tools for detecting image fabrication into the publication process. Publishers may enhance their screening procedures, and educational institutions might introduce or expand research integrity training. There could also be a push for international collaboration to establish standardized ethical guidelines for the use of AI in research. As AI technology continues to evolve, ongoing dialogue and cooperation among stakeholders will be essential to address emerging challenges and ensure the responsible use of AI in science.

Beyond the Headlines

The ethical implications of AI-generated images extend beyond immediate concerns of research integrity. They highlight broader issues of trust and accountability in the digital age, where technology can easily blur the lines between reality and fabrication. This development may prompt a reevaluation of how scientific data is verified and shared, potentially leading to new norms and practices in scientific communication. The situation also raises questions about the role of AI in creative and intellectual endeavors, challenging traditional notions of authorship and originality.