What's Happening?

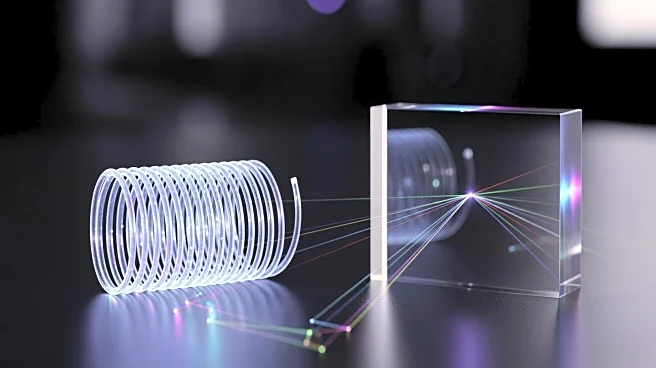

Researchers at Aalto University, led by Dr. Yufeng Zhang, have developed a method for performing complex tensor operations with light rather than traditional electronic circuits. The approach, known as single-shot tensor computing, completes a computation in a single pass of light through the system, so the result is produced at the speed of light. The technique encodes digital information into the amplitude and phase of light waves, which lets mathematical operations such as matrix and tensor multiplication happen simultaneously as the light propagates. The researchers say the method could make AI tasks faster and more efficient while lowering power consumption.
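The key idea is that multiplying and summing light fields amounts to a matrix product. A small numerical simulation can illustrate this. The sketch below is hypothetical and is not the Aalto team's method. It assumes that each real number is encoded with its magnitude in the wave's amplitude and its sign in the phase (0 or π). It also assumes that a linear optical element behaves like a grid of transmission coefficients whose outputs add together (interfere) at each detector. The helper names `encode`, `decode`, `optical_matvec`, and `optical_matmul` are illustrative only.

```python
import cmath
import math


def encode(value: float) -> complex:
    # Hypothetical encoding (an assumption, not the published scheme):
    # magnitude -> wave amplitude, sign -> phase of 0 or pi.
    phase = 0.0 if value >= 0 else math.pi
    return cmath.rect(abs(value), phase)


def decode(field: complex) -> float:
    # The imaginary part is only floating-point residue from sin(pi),
    # so the real part recovers the signed value.
    return field.real


def optical_matvec(weights, inputs):
    """Simulate coherent light crossing a linear optical element.

    Each weight acts as a transmission coefficient: multiplying two
    complex fields multiplies their amplitudes and adds their phases.
    Fields arriving at the same output port add together (interfere),
    so each output equals one row of a matrix-vector product.
    """
    fields = [encode(x) for x in inputs]
    outputs = []
    for row in weights:
        total = sum(encode(w) * f for w, f in zip(row, fields))
        outputs.append(decode(total))
    return outputs


def optical_matmul(weights, columns):
    # Hardware would handle all input columns in the same pass of light;
    # this simulation just loops over them and returns one output column each.
    return [optical_matvec(weights, col) for col in columns]


if __name__ == "__main__":
    W = [[1.0, 2.0], [3.0, -4.0]]
    x = [5.0, -1.0]
    print(optical_matvec(W, x))
```

Every step here is an ordinary complex multiply-and-add, so the result matches a conventional matrix product. The claimed physical advantage is that light performs all of those operations at once as it propagates. A sequential loop like this one cannot capture that.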

Why It's Important?

Light-based computing could substantially increase AI processing speed while reducing energy consumption. As AI systems take on a growing role in tasks such as image processing and language understanding, performing complex calculations quickly and efficiently becomes crucial. This advance could lead to more scalable AI systems, benefiting industries that rely on large-scale data processing. If the method can be integrated into existing hardware, it could also accelerate AI workloads across many fields.

What's Next?

The research team plans to integrate this computational framework onto photonic chips, enabling light-based processors to perform complex AI tasks with extremely low power consumption. Dr. Zhang estimates that the method could be incorporated into existing systems within 3 to 5 years, paving the way for a new generation of optical computing systems. That timeline could open the door to widespread adoption by major technology companies, further expanding AI capabilities and applications.

Beyond the Headlines

This innovation highlights the potential for light-based computing to address current limitations of digital hardware, such as speed and energy use. By computing directly with the physical properties of light, researchers are pursuing an alternative to purely electronic processing. This could have long-term implications for the efficiency and scalability of AI systems.