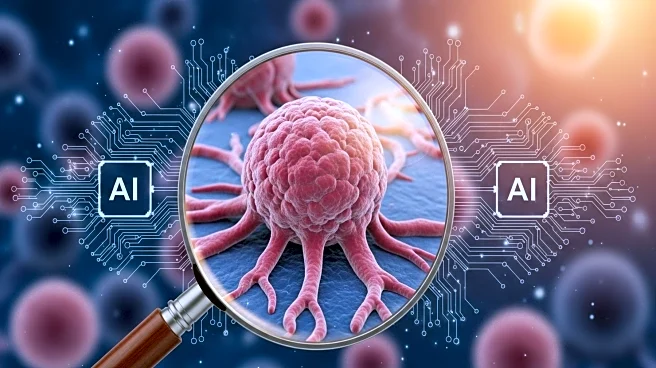

What's Happening?

A new AI system aims to make Gleason grading of prostate cancer more accurate and more explainable. Instead of training on a single "correct" label per region, it uses soft labels that retain the intrinsic uncertainty in the training data, that is, the disagreement among the pathologists who annotated it. This improved segmentation performance compared with conventional single-label training. The model, called Gleason XAI, follows a concept bottleneck design. For each pixel, it first predicts explanations defined by pathologists, and these predictions are then mapped to the corresponding Gleason patterns. Because every decision rests on expert-verified explanations rather than on the opaque internals of a black-box model, the system is explainable by design. To build it, the developers worked with pathologists to create a comprehensive medical ontology for Gleason patterns, so that experts can verify the reasoning behind each prediction.
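The two mechanisms described here, soft labels and concept-to-pattern remapping, can be illustrated with a minimal Python sketch. It assumes the model outputs a per-pixel probability distribution over concepts. The concept names and the `CONCEPT_TO_PATTERN` table are hypothetical simplifications of standard Gleason morphology, not the ontology used by Gleason XAI. Summing concept probabilities per pattern is one plausible remapping rule, not necessarily the published method. `soft_label` turns several annotators' labels for one pixel into a probability vector, `soft_cross_entropy` scores a prediction against that soft target, and `remap_to_patterns` collapses concept probabilities into Gleason-pattern probabilities.

```python
import math
from collections import Counter

# Hypothetical, simplified concept-to-pattern table (illustrative only;
# NOT the ontology used by Gleason XAI).
CONCEPT_TO_PATTERN = {
    "well_formed_glands": 3,
    "cribriform_glands": 4,
    "poorly_formed_glands": 4,
    "solid_sheets": 5,
}
CONCEPTS = list(CONCEPT_TO_PATTERN)


def soft_label(annotations):
    """Convert several annotators' concept labels for one pixel into a
    probability vector ordered like CONCEPTS (the soft label)."""
    counts = Counter(annotations)
    n = len(annotations)
    return [counts[c] / n for c in CONCEPTS]


def soft_cross_entropy(target, predicted, eps=1e-12):
    """Cross-entropy between a soft target distribution and predicted
    concept probabilities; eps avoids log(0)."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, predicted))


def remap_to_patterns(concept_probs):
    """Sum per-pixel concept probabilities into Gleason-pattern
    probabilities (an assumed remapping rule)."""
    pattern_probs = {}
    for concept, prob in zip(CONCEPTS, concept_probs):
        pattern = CONCEPT_TO_PATTERN[concept]
        pattern_probs[pattern] = pattern_probs.get(pattern, 0.0) + prob
    return pattern_probs


if __name__ == "__main__":
    # Three pathologists describe the same pixel differently.
    target = soft_label(["cribriform_glands", "cribriform_glands", "poorly_formed_glands"])
    predicted = [0.05, 0.6, 0.3, 0.05]  # hypothetical model output for this pixel
    print("loss:", soft_cross_entropy(target, predicted))
    patterns = remap_to_patterns(predicted)
    print("most likely pattern:", max(patterns, key=patterns.get))  # prints 4
```

Training against the target `[0, 2/3, 1/3, 0]` instead of a one-hot vector for the majority vote is what lets the model keep the annotators' disagreement rather than discard it. Because the pattern is derived only from the concept probabilities, each predicted pattern can be traced back to the explanations that produced it.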

Why Is It Important?

An explainable AI system for Gleason grading is significant because it addresses the high interobserver variability of conventional grading, in which different pathologists often assign different grades to the same tissue. A model that is both more accurate and interpretable could improve diagnostic precision and, in turn, patient outcomes. Soft labels preserve the uncertainty among human experts rather than forcing a single answer, which matters in a field where grading differences can change treatment decisions. Over time, this could support more standardized grading, reducing discrepancies and making prostate cancer diagnoses more reliable across medical institutions.

What's Next?

The next steps are further validation and possible integration of the Gleason XAI system into clinical practice. This could include training pathologists to use the system and conducting larger studies to confirm its efficacy in diverse clinical settings. The model's framework could also be adapted to grading other types of cancer, expanding its utility in oncology, with medical institutions and AI developers collaborating to refine the system and explore further diagnostic applications.

Beyond the Headlines

Using AI in medical diagnostics raises significant ethical questions, because it shifts part of the decision-making from human experts to algorithmic models. Transparency and accountability in AI-driven diagnoses are essential for maintaining trust in medical technology. Explainable models like Gleason XAI also highlight the importance of interdisciplinary collaboration in healthcare innovation, combining expertise from pathology, AI, and medical ethics to create systems that are both effective and ethically sound.