What is the story about?

What's Happening?

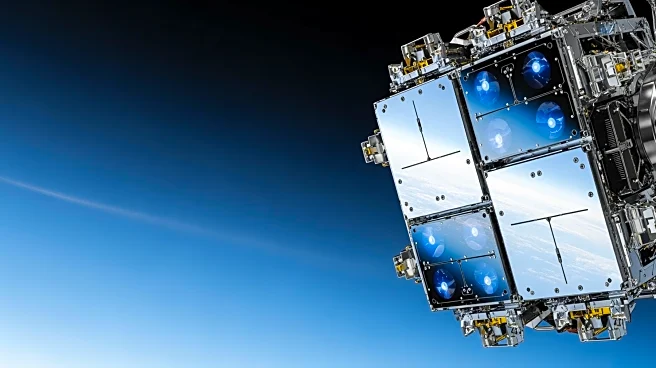

Agentic AI is emerging as a significant development in the cybersecurity landscape, bringing both opportunities and challenges. Diana Kelley, Chief Information Security Officer at Noma Security, discusses the potential of agentic AI, which combines software with language models so that systems can make decisions and act autonomously toward defined goals. The technology is particularly valuable in industries where speed and context are critical, such as financial services, where it can aid fraud detection, and manufacturing, where it can help optimize supply chains. In cybersecurity, agentic AI is being explored to improve real-time threat detection and to automate remediation actions. However, adopting agentic AI carries risks, including over-reliance on autonomous systems, data loss, and potential regulatory violations.

Why Is It Important?

Integrating agentic AI into cybersecurity and other industries could yield significant efficiency gains, improved accuracy, and faster problem resolution. By automating processes that previously required manual intervention, organizations can strengthen their resilience and response capabilities. However, the risks associated with agentic AI, such as data breaches and system outages, highlight the need for robust governance and oversight. Ensuring transparency and accountability in AI systems is crucial to preventing reputational damage and maintaining stakeholder trust. As agentic AI continues to evolve, organizations must weigh its benefits against its potential downsides in order to realize its full potential responsibly.

What's Next?

Organizations looking to adopt agentic AI should start by establishing governance and risk management frameworks aligned with industry standards and regulations. Inventorying existing AI usage and engaging with business owners to understand their objectives can support responsible adoption. Pilot deployments combined with red-team testing and ongoing monitoring can help surface weaknesses early. Building trust with employees, customers, and regulators through openness about how AI systems make decisions is essential. As agentic AI advances, organizations must remain vigilant in ensuring that AI systems amplify human judgment rather than replace it.

Beyond the Headlines

The ethical and regulatory implications of agentic AI are significant, as organizations must navigate potential biases and adversarial attacks. Including diverse perspectives in AI design and oversight can reduce blind spots and make systems more resilient. The success of agentic AI depends not only on technological advances but also on fostering collaboration and inclusion within organizations. As AI becomes more embedded in decision-making, maintaining human oversight and accountability will be crucial to preventing unintended consequences.