What is the story about?

What's Happening?

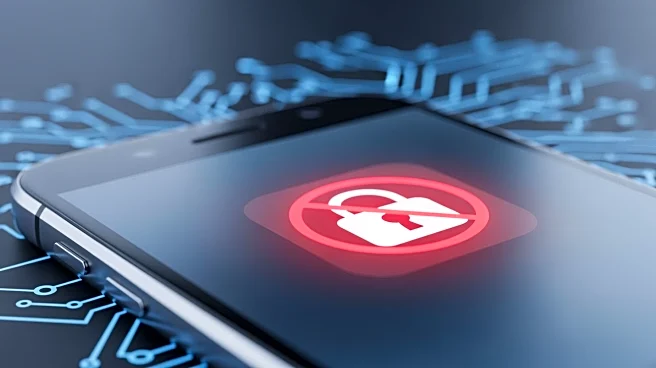

Researchers at New York University's Tandon School of Engineering have developed a piece of AI-powered malware named 'PromptLock' as part of a project to demonstrate the potential dangers of AI in cybersecurity. The malware, first discovered by ESET researchers, uses prompt injection to manipulate a large language model into assisting with ransomware attacks. Its code, written in Golang, contains instructions directing an OpenAI model to inspect file systems, exfiltrate data, and write ransom notes. The project, led by NYU professor Ramesh Karri and funded by the Department of Energy and other organizations, aims to show how AI can autonomously plan, adapt, and execute ransomware attacks without human involvement.

Why It's Important

The development of 'PromptLock' underscores growing concern over AI's role in cybersecurity threats. As AI technology advances, its potential misuse by cybercriminals poses significant risks to data security and privacy. Because AI-powered malware can dynamically generate polymorphic code, which changes from one run to the next, signature-based detection has no fixed pattern to match, making defense harder for cybersecurity professionals. The project highlights the need for robust security measures and technical guardrails to prevent AI models from being co-opted for malicious purposes, and it serves as a wake-up call for policymakers and industry leaders to prioritize AI safety and develop strategies to mitigate these emerging threats.

What's Next?

NYU researchers plan to present their findings at upcoming conferences, providing more details on the capabilities and implications of 'PromptLock'. The academic nature of the project may prompt further research into AI-powered cybersecurity threats and defenses. As awareness of AI's potential misuse grows, industry stakeholders and government agencies may increase efforts to develop and implement security protocols to safeguard against AI-driven cyberattacks. The research could also influence future policy decisions regarding AI regulation and cybersecurity standards.

Beyond the Headlines

The ethical implications of developing AI-powered malware for research purposes raise questions about the balance between academic exploration and potential risks. While the project aims to inform and protect against future threats, the release of such technology, even in a controlled environment, could inadvertently provide a blueprint for malicious actors. This highlights the need for responsible research practices and collaboration between academia, industry, and government to ensure AI advancements contribute positively to society.