What's Happening?

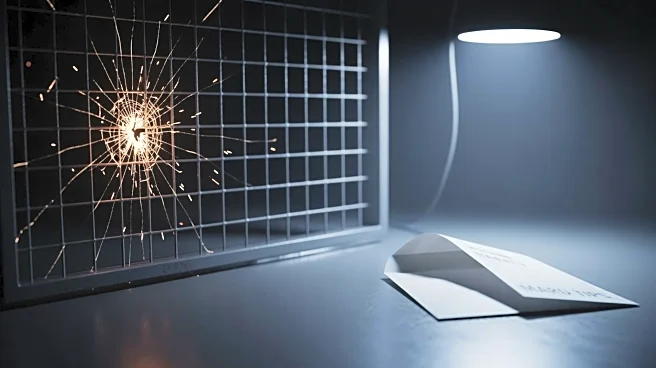

Data poisoning, in which false or manipulated data is introduced to skew analytics and decision-making, is emerging as a significant threat to public sector AI systems. It can lead to incorrect conclusions that affect policy, budgets, and services. Experts are concerned about its potential to influence high-stakes areas such as fraud detection, cybersecurity, and health-related analysis. Manipulated data can cause AI models to misinterpret inputs, leading to misclassifications and overlooked risks. The problem is particularly hard to address because the effects of poisoning can persist even as models are updated or retrained. The complexity of integrated data flows in public services such as health and law enforcement makes oversight difficult, increasing the risk that manipulation goes unnoticed.
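To make the misclassification mechanism concrete, here is a toy Python sketch of one common poisoning technique: injecting mislabelled records into training data. It uses a nearest-centroid classifier on a single feature. The feature (a hypothetical anomaly score per benefits claim), the labels, and every value are invented for illustration; real public sector models and real attacks are far more complex.

```python
from statistics import mean

def train_centroids(samples):
    """Nearest-centroid 'training': average the feature value for each label."""
    by_label = {}
    for x, label in samples:
        by_label.setdefault(label, []).append(x)
    return {label: mean(xs) for label, xs in by_label.items()}

def predict(centroids, x):
    """Assign x to the label whose centroid is closest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

# Hypothetical training data: (anomaly score, label) for past claims.
clean = [(0.1, "legit"), (0.2, "legit"), (0.3, "legit"),
         (0.8, "fraud"), (0.9, "fraud"), (1.0, "fraud")]

# Poisoning: an attacker injects high-score records falsely labelled "legit".
poisoned = clean + [(0.9, "legit"), (0.95, "legit"), (1.0, "legit")]

suspicious_claim = 0.7
clean_verdict = predict(train_centroids(clean), suspicious_claim)
poisoned_verdict = predict(train_centroids(poisoned), suspicious_claim)
print(clean_verdict)     # fraud
print(poisoned_verdict)  # legit
```

Three injected records move the "legit" centroid from 0.2 to roughly 0.575, so a claim the clean model flags as fraud is waved through by the poisoned one: the "overlooked risk" pattern in miniature.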

Why It's Important?

Data poisoning can undermine the integrity of the AI systems that many public sector agencies rely on for critical decision-making. By distorting data, attackers can redirect resources away from genuinely high-risk areas, potentially leading to inefficient or harmful outcomes. This threat highlights the need for robust data governance and security measures to protect the integrity of AI models. As AI becomes more integrated into public sector operations, ensuring the accuracy and reliability of data is crucial to maintaining public trust and effective service delivery. The potential for nation-state actors to exploit data poisoning in influence campaigns further underscores the geopolitical risks involved.

What's Next?

To combat data poisoning, public sector organizations must enhance their data governance frameworks, ensuring strong oversight and cross-checks to detect and mitigate manipulation. Collaboration between data scientists, programmers, and analysts is essential to understand data flows and identify potential vulnerabilities. As AI security becomes a critical concern, agencies must focus on tracking data sources, understanding data supply chains, and implementing versioning to detect alterations. The development of automated techniques to safeguard data integrity will be vital in protecting AI systems from manipulation. Ongoing research and investment in AI security will be necessary to address this evolving threat landscape.
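The versioning idea can be sketched in Python: fingerprint each record with SHA-256 when a dataset version is published, then compare fingerprints between versions to flag records that were added, removed, or altered. This is a minimal sketch assuming records are JSON-serializable dicts with a unique `id` field; the field names and the `snapshot`/`diff_snapshots` helpers are hypothetical, not taken from any specific agency tool.

```python
import hashlib
import json

def fingerprint(record):
    """Stable SHA-256 digest of one record (keys sorted so field order doesn't matter)."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def snapshot(records):
    """Map each record ID to its fingerprint; store this with the dataset version."""
    return {r["id"]: fingerprint(r) for r in records}

def diff_snapshots(old, new):
    """Report record IDs added, removed, or altered between two versions."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    altered = sorted(k for k in old.keys() & new.keys() if old[k] != new[k])
    return {"added": added, "removed": removed, "altered": altered}

# Hypothetical versions of a small claims dataset.
v1 = [{"id": 1, "region": "north", "claims": 12},
      {"id": 2, "region": "south", "claims": 7}]
v2 = [{"id": 1, "region": "north", "claims": 3},   # silently edited
      {"id": 2, "region": "south", "claims": 7},
      {"id": 3, "region": "east", "claims": 40}]   # newly injected

changes = diff_snapshots(snapshot(v1), snapshot(v2))
print(changes)  # {'added': [3], 'removed': [], 'altered': [1]}
```

Hashing only reveals changes made after a snapshot is taken; records that were already poisoned when the first snapshot was recorded pass unnoticed, which is why source tracking and cross-checks remain necessary alongside versioning.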

Beyond the Headlines

Data poisoning raises ethical and legal questions about the responsibility of organizations to ensure the accuracy of their AI systems. The potential for manipulated data to influence public policy and services highlights the need for transparency and accountability in AI deployment. As AI systems increasingly inform individual and institutional decisions, the integrity of the data they rely on becomes a matter of public interest. The challenge of detecting and remediating data poisoning underscores the importance of developing robust AI governance frameworks that prioritize data integrity and security.