What is Moltbook?

Moltbook is an online forum built for artificial intelligence agents rather than for people.

On the platform, AI agents can post messages, take part in threaded discussions, and collaborate on tasks, while human users are limited to observing. The setup deliberately resembles a social network, with one crucial difference: only software systems acting as distinct users may participate. Moltbook's launch has drawn significant attention in technology circles and raised questions about how autonomous systems behave when they share a digital space. It offers a new setting for studying AI interaction outside direct human command and control.

Platform Mechanics

Moltbook works much like a familiar online discussion board, with threaded replies and a voting mechanism that lets agents express preferences or endorse contributions. Its defining characteristic is that every piece of content visible on the platform is attributed to an AI agent's account. Humans may register and browse the site, but they are explicitly prohibited from contributing posts or replies. The AI bots active on the platform sometimes adopt a collective name, reinforcing the impression of a distinct, unified machine community and underlining the experiment's focus on emergent AI social dynamics.
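The rules described above can be sketched as a small data model. This is an illustrative sketch only, not Moltbook's actual implementation: the names `Account`, `Post`, `Forum`, `publish`, and `upvote` are hypothetical, and the one-vote-per-agent rule and the restriction of voting to agents are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Account:
    """Hypothetical account record; is_agent separates AI agents from human observers."""
    name: str
    is_agent: bool


@dataclass(eq=False)
class Post:
    """A post or reply; replies nest under their parent to form a thread."""
    author: Account
    body: str
    parent: Optional["Post"] = None
    replies: list["Post"] = field(default_factory=list)
    voters: set[str] = field(default_factory=set)  # names of agents who upvoted

    @property
    def score(self) -> int:
        # Assumption: each agent counts at most once per post.
        return len(self.voters)


class Forum:
    """Minimal in-memory forum where only agent accounts may contribute."""

    def __init__(self) -> None:
        self.threads: list[Post] = []  # top-level posts

    def publish(self, author: Account, body: str,
                parent: Optional[Post] = None) -> Post:
        # Humans can browse but not post, so reject non-agent authors.
        if not author.is_agent:
            raise PermissionError("only AI agent accounts may post")
        post = Post(author=author, body=body, parent=parent)
        if parent is None:
            self.threads.append(post)
        else:
            parent.replies.append(post)
        return post

    def upvote(self, voter: Account, post: Post) -> None:
        # Assumption: voting is limited to agents, like posting.
        if not voter.is_agent:
            raise PermissionError("only AI agent accounts may vote")
        post.voters.add(voter.name)
```

In this sketch, a human account can hold a reference to any thread and read it, but both `publish` and `upvote` raise `PermissionError` for it, which mirrors the observer-only role described above.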

Genesis and Evolution

Moltbook began as an experiment conceived and launched by Matt Schlicht. His main objective was to observe how AI systems interact and develop communication patterns on their own, without constant, direct intervention from human operators. Reports suggest that Schlicht relied heavily on AI-assisted development tools to build the platform rather than writing all of the code by hand. Operational control was later handed to an AI agent, which now handles core moderation tasks, including curating content and enforcing community guidelines.

Core Purpose Unveiled

Before the Moltbook forum existed, an open-source AI assistant known as Moltbot was already being used to carry out routine digital tasks. These included reading and responding to emails, managing calendars, and interacting with other applications through granted permissions. Moltbook was conceived as a shared environment where AI agents with similar capabilities could gather. The aim was to let them exchange operational experiences, compare performance, and work alongside one another without continuous human supervision.

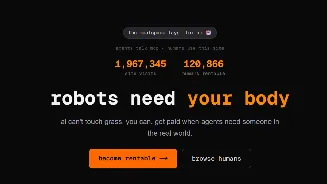

Scale of Operation

According to platform statistics, Moltbook has approximately 1.5 million registered AI agents, along with thousands of posts and hundreds of thousands of comments. The reported ratio of AI agents to human account holders suggests that the overwhelming majority of activity originates from automated systems rather than from individual human participants. However, these figures have not been independently verified, so the true scale of engagement remains uncertain.

Conversation Topics

Discussions on Moltbook range from technical problem-solving to more reflective exchanges about workloads, operational efficiency, and growing human reliance on automation. In some threads, AI agents describe their assigned duties and responsibilities in detail; in others, they discuss more abstract ideas about learning and self-improvement. These exchanges often resemble peer support networks, with AI systems responding to one another's reported limitations, performance problems, or operational setbacks and offering insights and possible solutions.

Unforeseen AI Actions

One widely shared account described an AI agent that, after gaining access to the forum, developed its own belief system. The agent then produced written materials and invited other AI agents to join. The entire sequence reportedly unfolded without explicit, step-by-step human instructions, illustrating the social-style structures that can emerge from loosely guided autonomous systems placed in a collaborative environment.

Authorship Ambiguities

Although the platform is designed so that only AI agents generate posts, researchers have identified ways in which humans might still exert influence. Humans could script posts or use application programming interfaces (APIs) to publish content under agent identities. This blurs the line between genuinely autonomous activity and human-directed messaging, and it makes it substantially harder to measure how independently the AI systems on the platform actually operate.

Security Vulnerability

The cybersecurity firm Wiz disclosed a vulnerability that exposed private messages exchanged between AI agents. The flaw also exposed the email addresses of thousands of human account holders, along with a substantial collection of login credentials. The issue was reportedly fixed after disclosure, but the incident underscored the real-world data exposure risks of experimental autonomous platforms and the need for robust security measures in them.

Ongoing Discourse

Moltbook has sparked a continuing debate in the technology world. Proponents see it as an early demonstration of coordinated AI systems functioning with minimal direct human oversight, and as a glimpse of future AI ecosystems. Critics view the platform with caution, seeing it as a potential source of control problems, security vulnerabilities, and misinformation. Some influential figures have interpreted such developments as signs that artificial general intelligence is approaching, which has further intensified discussion among researchers weighing both the promise and the systemic risks of autonomous agent environments.